Biological and Computer Vision

Gabriel Kreiman

Cambridge University Press. 2021. ISBN 9781108649995

Additional Materials

Chapter VII: Neurobiologically plausible computational models

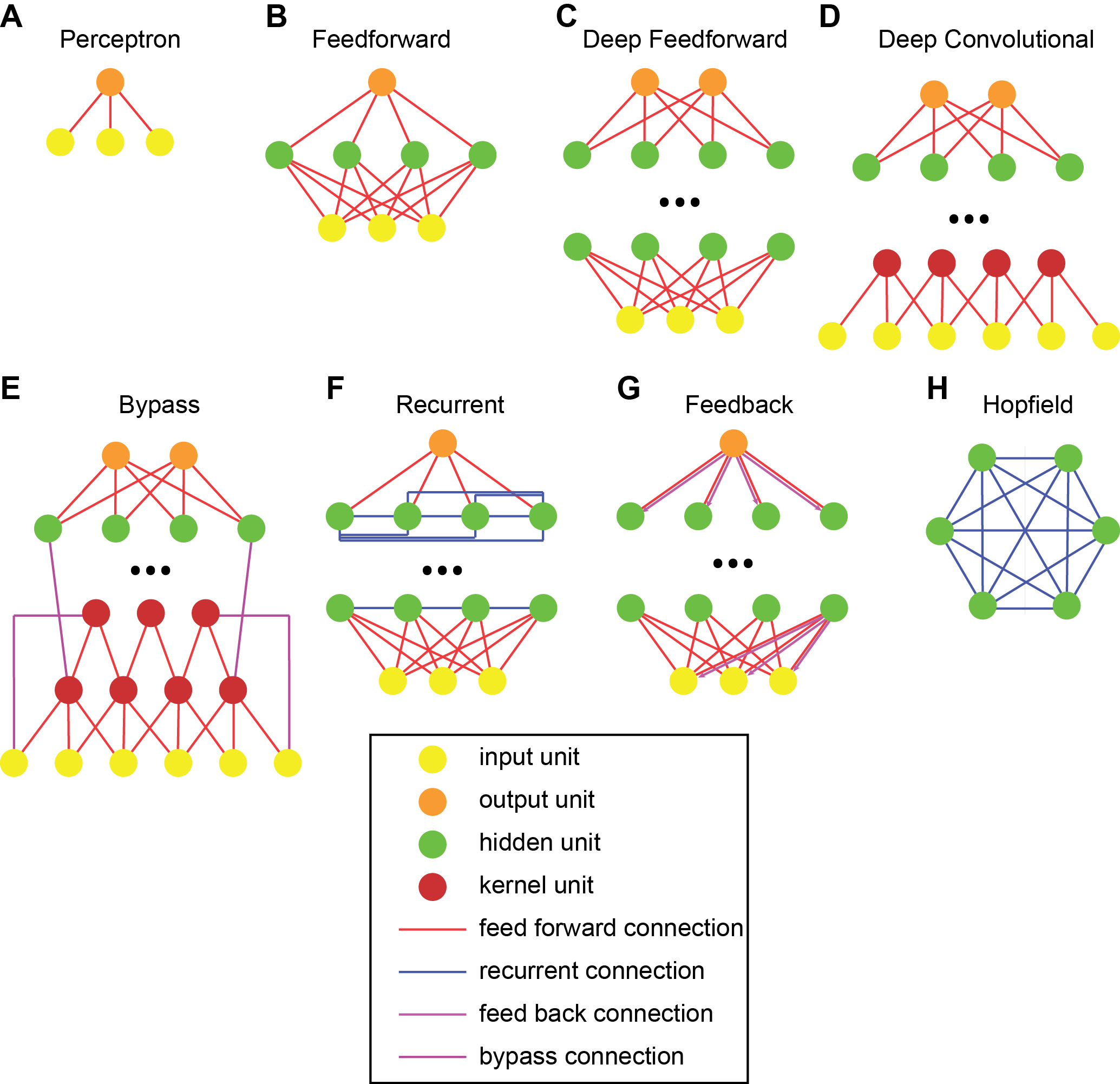

Understanding vision requires quantitative theories, instantiated in computational models, that can explain the wealth of behavioral and neurophysiological observations. Computational models abstract away aspects of the underlying biophysics to extract fundamental principles of function. The basic atoms of models are units that emulate neurons, together with the ways of connecting those units into neural networks with emergent computational properties. The integrate-and-fire neuron model captures the essence of how neurons combine their inputs and emit a spike when their internal voltage reaches a threshold. Elementary computations, including convolution, filtering, pooling, and normalization, are used throughout neural networks. Neural networks are often arranged in semi-hierarchical architectures that include bottom-up (feedforward), horizontal (lateral), and top-down (feedback) connections. An exquisite variety of models and functions can be derived by astutely mixing and matching a small set of elementary operations and connectivity patterns. A classic example is the Hopfield recurrent network, whose rich dynamics are governed by attractor states. Computational neuroscience efforts pave the way towards a systematic and quantitative understanding of the mechanisms orchestrating visual processing.
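To make the integrate-and-fire idea concrete, here is a minimal sketch of the leaky variant of the model, integrated with forward Euler steps. The function name `simulate_lif` and all parameter values (membrane time constant, resting, threshold, and reset voltages, membrane resistance) are illustrative assumptions rather than values taken from the chapter. The unit sums its input current, leaks back toward rest, and emits a spike and resets whenever its voltage crosses threshold.

```python
import numpy as np

def simulate_lif(current_na, dt_ms=0.1, tau_ms=10.0, v_rest=-65.0,
                 v_thresh=-50.0, v_reset=-65.0, r_mohm=10.0):
    """Leaky integrate-and-fire neuron, forward Euler integration.

    current_na: 1-D array of input current (nA), one value per time step.
    Returns the membrane voltage trace (mV) and the spike times (ms).
    All default parameter values are illustrative assumptions.
    """
    v = v_rest
    voltages = np.empty(len(current_na))
    spike_times = []
    for step, i_in in enumerate(current_na):
        # tau dV/dt = -(V - V_rest) + R*I  (R in MOhm times I in nA gives mV)
        dv = (-(v - v_rest) + r_mohm * i_in) / tau_ms
        v += dv * dt_ms
        if v >= v_thresh:
            # Threshold crossing: record a spike, then reset the voltage.
            spike_times.append(step * dt_ms)
            v = v_reset
        voltages[step] = v
    return voltages, spike_times

# 200 ms of constant input at 0.1 ms resolution.
# 2 nA * 10 MOhm = 20 mV of drive: the steady state (-45 mV) lies above
# threshold, so the unit fires repeatedly.
voltages, spikes = simulate_lif(np.full(2000, 2.0))
# 1 nA gives a steady state of -55 mV, below threshold, so no spikes occur.
_, no_spikes = simulate_lif(np.full(2000, 1.0))
```

The threshold behavior is the point of the example: a weak input charges the membrane toward a subthreshold steady state and never produces a spike, whereas a stronger input drives the unit to fire at a regular rate set by how quickly the voltage recharges after each reset.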
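The elementary operations listed above can be chained in a few lines. The sketch below filters an image with oriented kernels, max-pools each filter map, and then applies divisive normalization across channels. The helper names, the 3x3 edge kernels, and the specific normalization formula (each channel divided by `sigma` plus the root-sum-square of all channels at the same location) are assumptions chosen for illustration; other pooling and normalization variants are equally common. As in most neural-network code, the "convolution" here is implemented as cross-correlation (the kernel is not flipped).

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Filter an image with a kernel ('valid' cross-correlation, no padding)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for r in range(out_h):
        for c in range(out_w):
            # Weighted sum over the local patch: one unit's receptive field.
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out

def max_pool(x, size=2):
    """Non-overlapping max pooling; leftover rows/columns are dropped."""
    h, w = x.shape[0] // size, x.shape[1] // size
    x = x[:h * size, :w * size]
    return x.reshape(h, size, w, size).max(axis=(1, 3))

def divisive_normalize(maps, sigma=1.0):
    """Divide each channel by the pooled activity of all channels at that location."""
    pooled = np.sqrt(np.sum(maps ** 2, axis=0))
    return maps / (sigma + pooled)

# A vertical luminance edge and two oriented (hypothetical) edge detectors.
image = np.zeros((8, 8))
image[:, 4:] = 1.0
vertical = np.array([[-1.0, 0.0, 1.0]] * 3)
horizontal = vertical.T

# Filter -> pool for each channel, then normalize across channels.
maps = np.stack([max_pool(conv2d_valid(image, k)) for k in (vertical, horizontal)])
normalized = divisive_normalize(maps)
```

Only the vertical-edge channel responds to this image. Normalization then rescales that response relative to the total activity at each location, so its peak of 3 becomes 3 / (1 + 3) = 0.75.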
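A minimal sketch of a Hopfield network follows, assuming the textbook formulation: binary (+1/-1) units, Hebbian outer-product storage with no self-connections, and asynchronous sign updates. The function names and the network size, pattern count, and noise level in the usage lines are illustrative assumptions. Starting from a corrupted version of a stored pattern, the dynamics descend the energy function and settle into the attractor corresponding to that pattern.

```python
import numpy as np

def train_hopfield(patterns):
    """Hebbian storage: W = (1/N) * sum_p x_p x_p^T, with zero diagonal."""
    n = patterns.shape[1]
    w = patterns.T @ patterns / n
    np.fill_diagonal(w, 0.0)  # no self-connections
    return w

def energy(w, state):
    """Hopfield energy E = -1/2 s^T W s; asynchronous updates never increase it."""
    return -0.5 * state @ w @ state

def recall(w, state, n_sweeps=10, rng=None):
    """Asynchronous dynamics: each unit in turn takes the sign of its net input."""
    rng = np.random.default_rng(0) if rng is None else rng
    state = state.copy()
    for _ in range(n_sweeps):
        changed = False
        for i in rng.permutation(len(state)):
            new_value = 1.0 if w[i] @ state >= 0 else -1.0
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:  # fixed point (attractor) reached
            break
    return state

# Store 3 random patterns in 100 units, then recall from a corrupted cue.
rng = np.random.default_rng(1)
patterns = rng.choice([-1.0, 1.0], size=(3, 100))
w = train_hopfield(patterns)

noisy = patterns[0].copy()
flipped = rng.choice(100, size=10, replace=False)
noisy[flipped] *= -1.0  # corrupt 10% of the units

recalled = recall(w, noisy)
```

Because the weights are symmetric and the diagonal is zero, each single-unit update can only lower the energy or leave it unchanged, which is why the dynamics settle into fixed points. With only 3 patterns in 100 units, the load is far below the network's capacity, so the corrupted cue is expected to fall back into the stored pattern.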