You must agree with the terms and conditions specified in this link before downloading any material from the Kreiman lab web site. Downloading any material from the Kreiman Lab web site implies your agreement with this license.

|

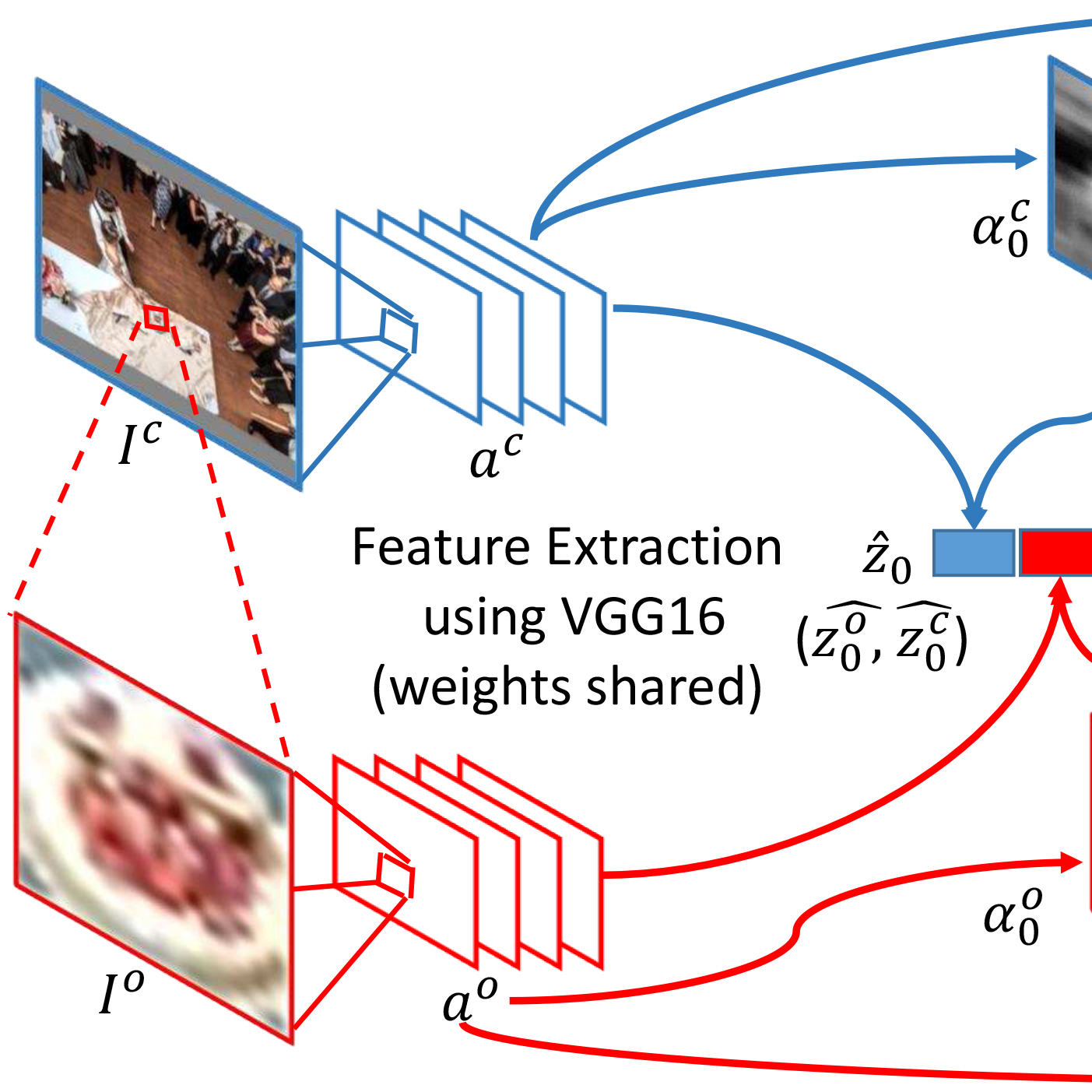

Talbot et al. L-WISE: Boosting human image category learning through model-based image selection and enhancement. International Conference on Learning Representations (ICLR) 2025. PDF Several studies have demonstrated that it is possible to disrupt visual recognition by altering images. It is easier to break things than to build things. Would it be possible to use computational models to enhance visual perception? Here Talbot and colleagues show that computational models of vision can be used to generate images that are easier to recognize. |

|

Casile et al. Neural correlates of minimally recognizable configurations in the human brain. Cell Reports 2025. PDF Casile et al. show that visual recognition of challenging stimuli relies on the dynamic interplay of feedback and feedforward processes between the frontal cortex and ventral visual stream areas. Rapid learning of complex stimuli is reflected by concomitant changes in the ventral visual stream. |

|

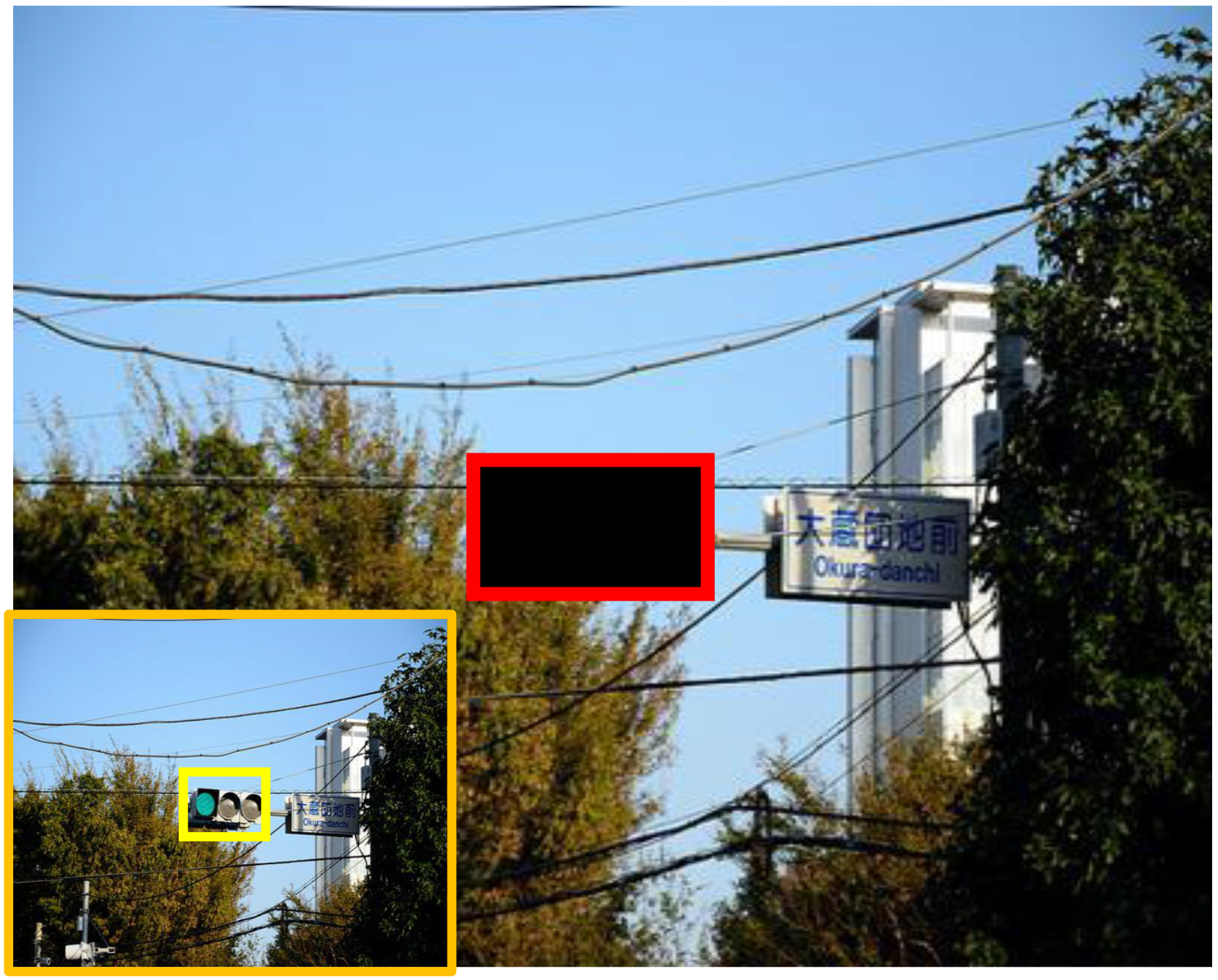

Xiao et al. Feature-selective responses in macaque visual cortex follow eye movements during natural vision. Nature Neuroscience 2024. PDF Primates move their eyes several times per second. Do visual neurons exhibit classical retinotopic properties during active viewing, anticipate gaze shifts, or mirror the stable quality of perception? We scrutinized neuronal responses in six areas within ventral visual cortex during free viewing of complex scenes. We found limited evidence for feature-selective predictive remapping and no viewing-history integration. Thus, ventral visual neurons represent the world in a predominantly eye-centered reference frame during natural vision. |

|

Li et al. Discovering neural policies to drive behavior by integrating deep reinforcement learning agents with biological neural networks. Nature Machine Intelligence 2024. PDF We built a hybrid between biological circuits and AI through a closed loop system combining reinforcement learning and optogenetics with the C. elegans worm. The animal plus agent system was able to navigate to targets, find food, and generalize to novel environments including obstacles without retraining. This work opens the door to investigate neuronal circuit function in a novel and unbiased manner and build systems that showcase the benefits of both artificial and biological circuits. |

|

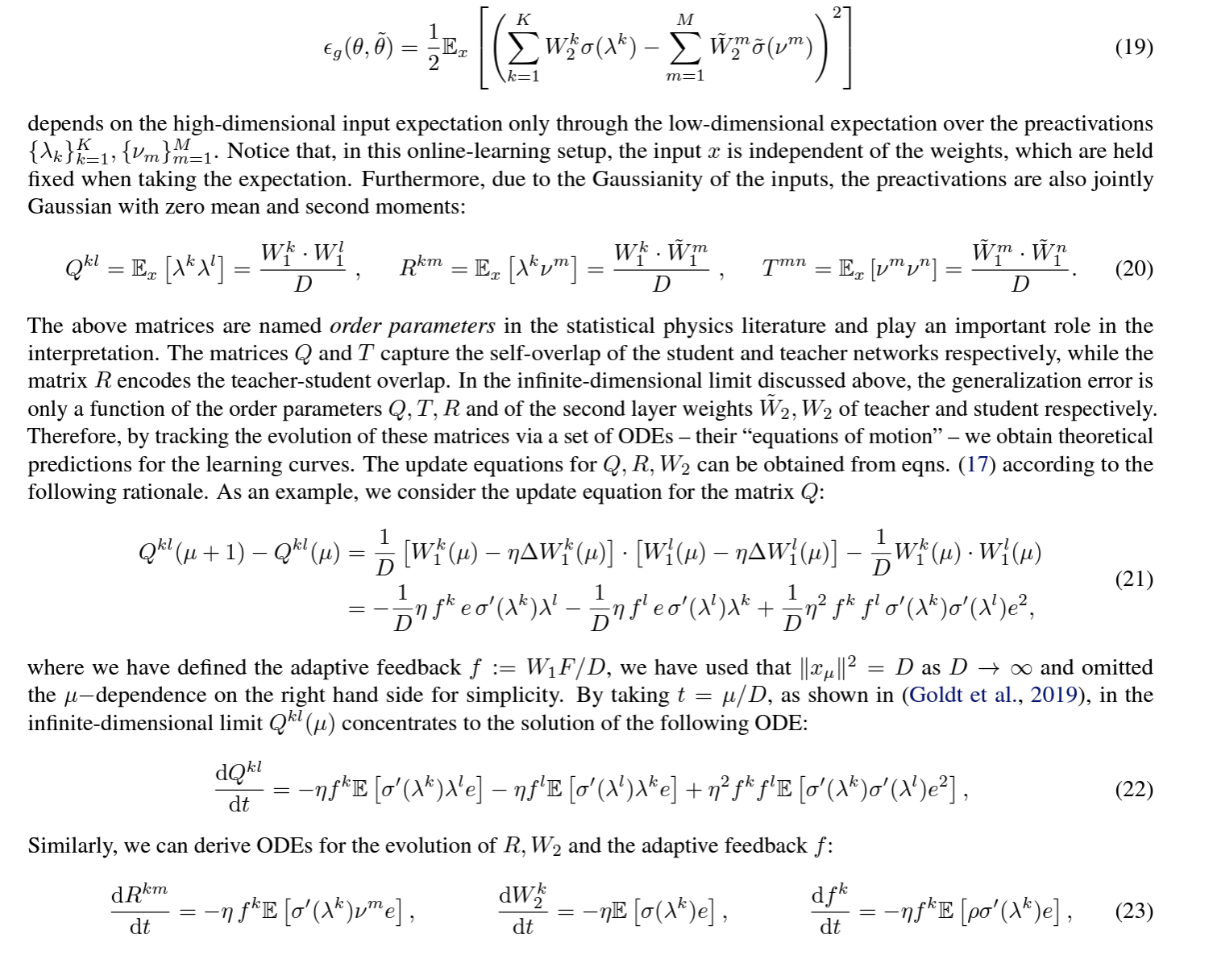

Srinivasan et al. Forward learning with top-down feedback: empirical and analytical characterization. International Conference on Learning Representations (ICLR) 2024. PDF Ever since backpropagation was proposed, critics have highlighted its biological implausibility. This study combines analytical tools and simulations to examine the properties of "forward-only" algorithms such as PEPITA as an alternative to backpropagation. This work introduces an analytical understanding of the dynamics underlying learning in PEPITA and makes progress towards bridging the gap between PEPITA and backpropagation in deep networks. |

|

Zheng et al. Theta phase precession supports memory formation and retrieval of naturalistic experience in humans. Nature Human Behaviour 2024. PDF The authors evaluated the timing of action potentials with respect to the ongoing local field potential signals in a task where participants watched short movie clips and later reported on their memories about the clips. The data showed that there is a consistent precession of the spike times starting after movie boundaries. |

|

Madan et al. Benchmarking out-of-distribution generalization capabilities of DNN-based encoding models for the ventral visual cortex. NeurIPS 2024. PDF Several studies use neural network models with a large number of free parameters to fit neuronal responses to visual stimuli. How well do such fitting procedures generalize to new data? Madan and colleagues show that these models do not generalize well, interpolating to similar images but failing to capture neuronal responses to images that are different from those in the training set. The study also provides the largest dataset of neuronal recordings from macaque IT to date. |

|

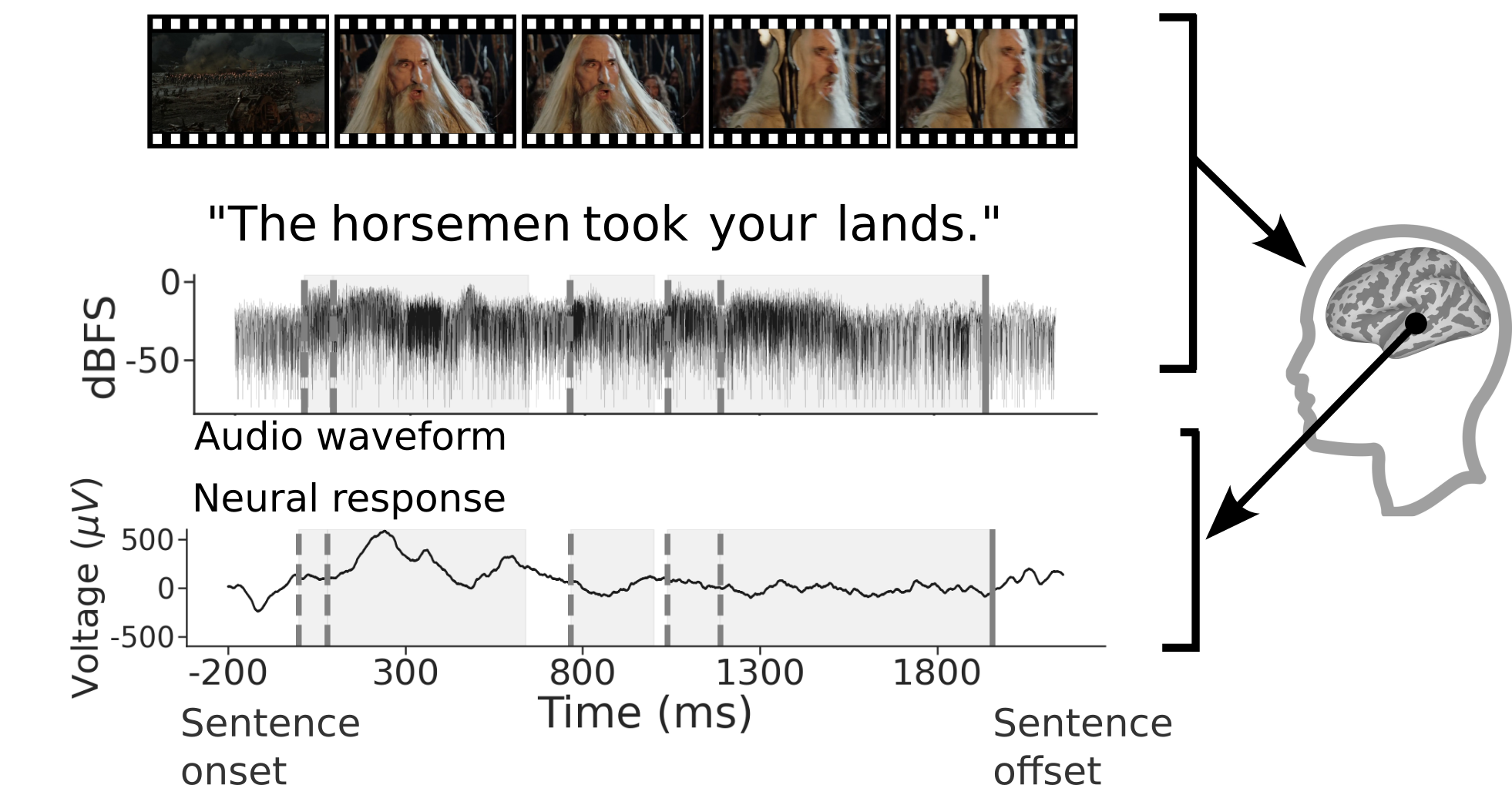

Wang et al. Brain treebank: Large-scale intracranial recordings from naturalistic language stimuli. NeurIPS 2024. PDF Wang et al. introduce a dataset of neurophysiological recordings from the human brain while participants watch commercial movies. The dataset is annotated for language studies with precise marking of the onset and identity of every word as well as other relevant annotations. The dataset comprises 36,000 sentences and 205,000 words. |

|

Subramaniam et al. Revealing Vision-Language Integration in the Brain with Multimodal Networks. International Conference on Machine Learning (ICML) 2024. PDF Vision and language signals must be put together to build complex cognitive concepts that link sensory inputs with internal representations. In this work, the authors use multimodal deep neural networks to identify neural signals that underlie vision-language integration in the human brain. |

|

Madan et al. Improving generalization by mimicking the human visual diet. bioRxiv 2024. PDF Generalizing to out-of-distribution samples remains a central challenge for Artificial Intelligence algorithms. In this study, Madan and colleagues propose that a central path toward improving the ability to generalize emerges from training neural networks using training data that better resembles how humans and other animals learn about their visual worlds. |

|

Talbot et al. Tuned compositional feature replays for efficient stream learning. IEEE Transactions on Neural Networks and Learning Systems 2023. PDF This study proposes a new algorithm to avoid catastrophic forgetting in stream learning settings. The model efficiently consolidates previous knowledge with a limited number of hypotheses in an augmented memory and replays relevant hypotheses. The algorithm performs comparably well or better than state-of-the-art methods, while offering more efficient memory usage. |

|

Wang et al. BrainBERT: Self-supervised representation learning for intracranial electrodes. ICLR 2023. PDF Wang et al. introduce a Transformer model to decode neural signals derived from intracranial field potential recordings. By initially learning data features in an unsupervised fashion, the algorithm yields higher classification performance and requires less data than traditional decoding methods. As a test case, this study applies this algorithm to investigate the representation of language signals during movies. |

|

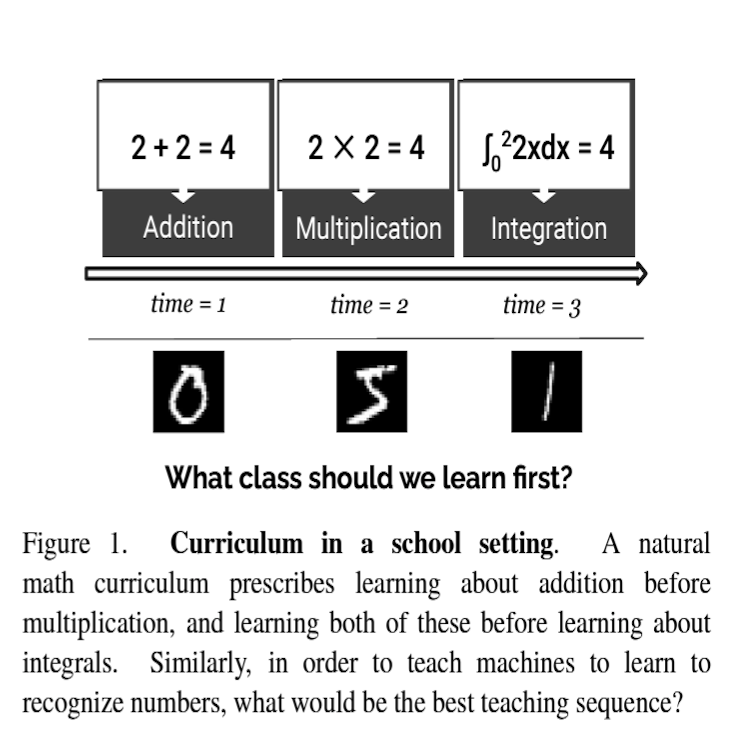

Singh et al. Learning to learn: how to continuously teach humans and machines. International Conference on Computer Vision (ICCV) 2023. PDF In the same way that we progress from addition to subtraction to multiplication to division when teaching math, the curriculum can have a profound impact on how machines learn. This study compares curriculum learning in humans and machines. The authors propose an algorithm to discover good curricula leading to better performance in visual recognition tasks. |

|

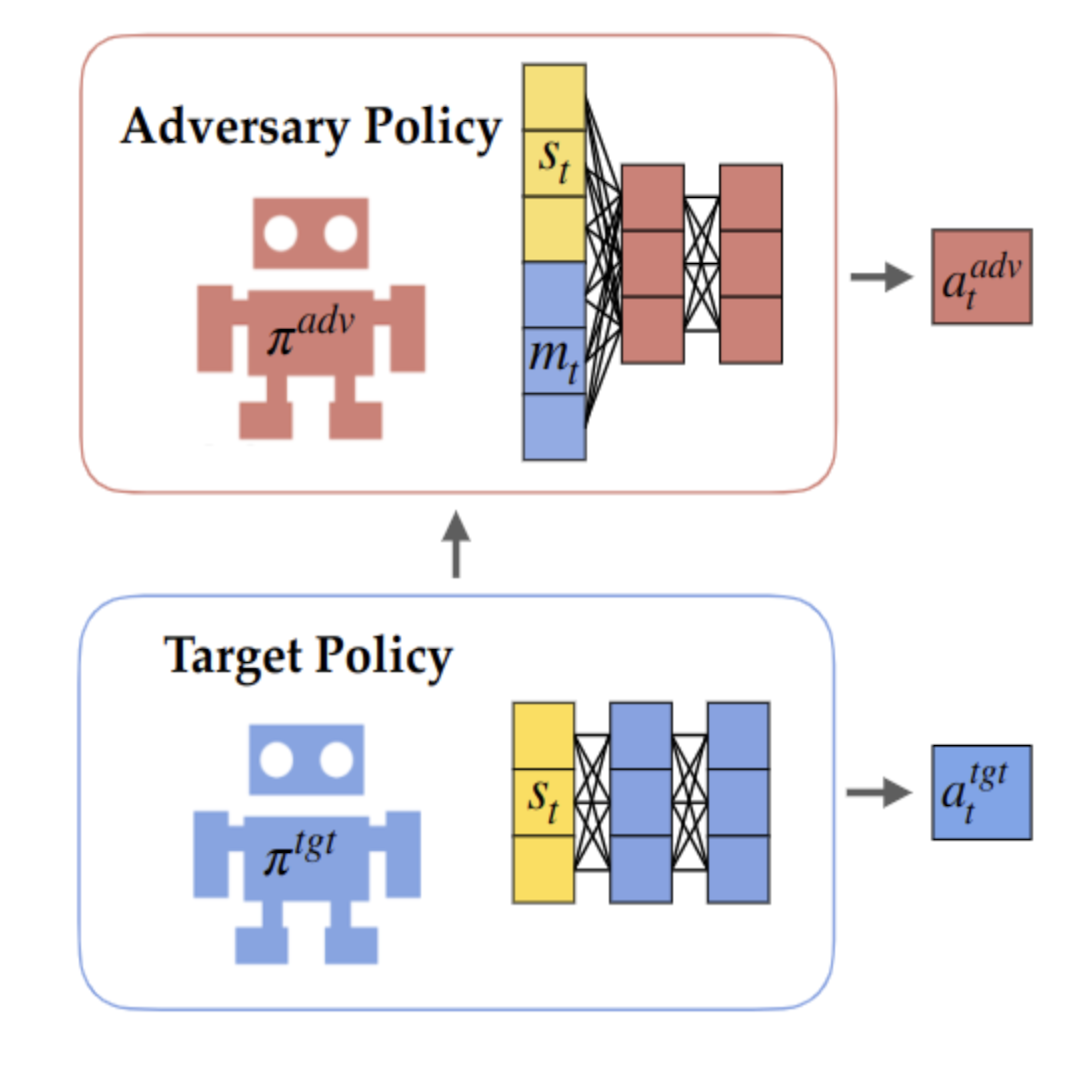

Casper et al. White-box adversarial policies against RL agents. arXiv 2023. PDF We study white-box adversarial attacks for deep reinforcement learning (RL) agents. We show that having access to a target's internal state can be useful for identifying its vulnerabilities. We introduce white-box adversarial policies where an attacker observes both a target's internal state and the world state in 2-player games and also in text-generating language models. We demonstrate that these policies achieve higher initial and asymptotic performance against a target agent compared to black box controls. |

|

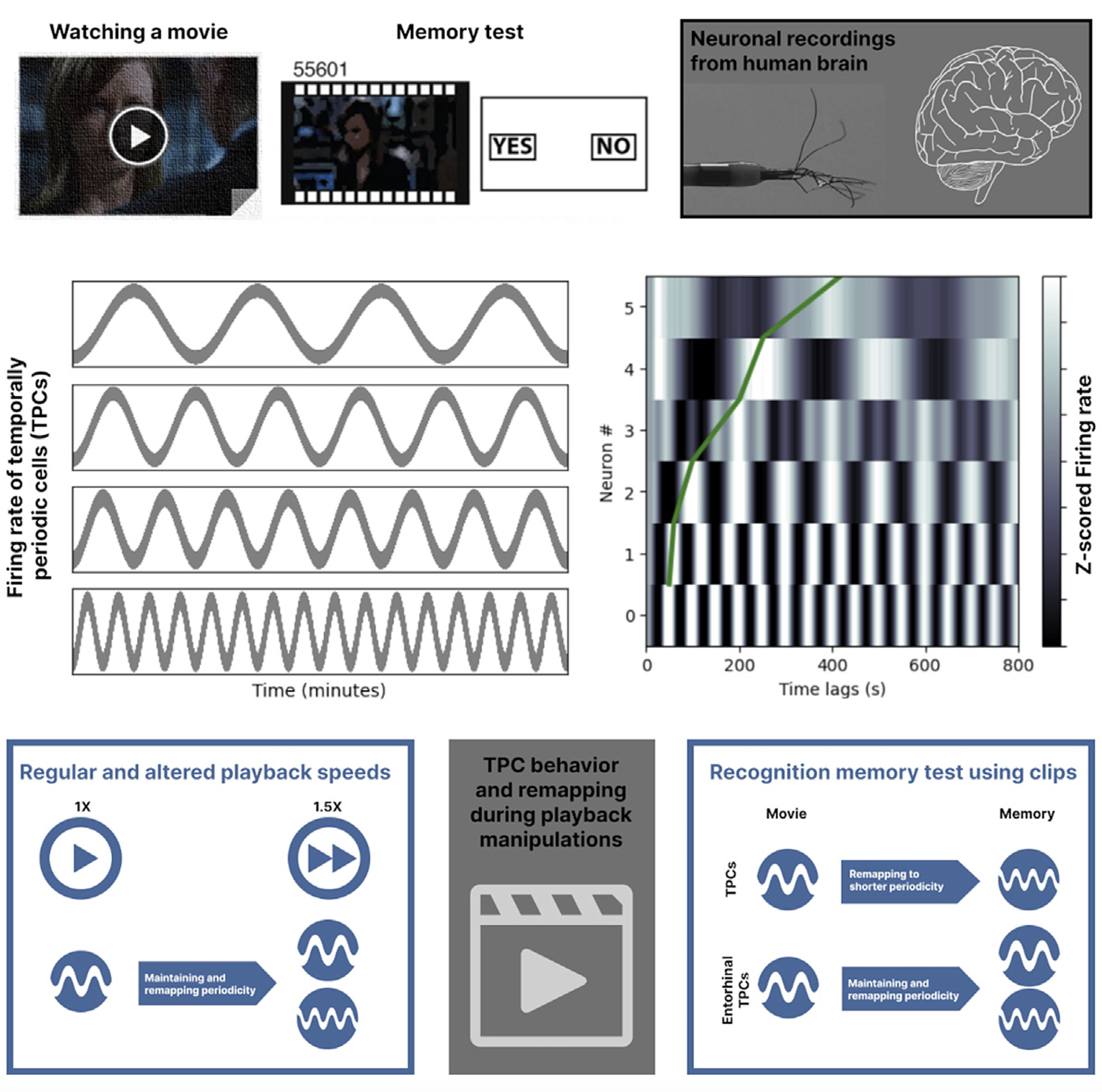

Aghajan et al. Minute-scale periodicity of neuronal firing in the human entorhinal cortex. Cell Reports 2023. PDF This study demonstrates that neurons in the human medial temporal lobe, particularly those in the entorhinal cortex, show minute-scale periodic firing activity while participants watch a movie. Different neurons maintain or remap their periodicity when the movie playback speed is altered. These response patterns complement the spatial periodicity described in the entorhinal cortex and may jointly specify a map of space and time. |

|

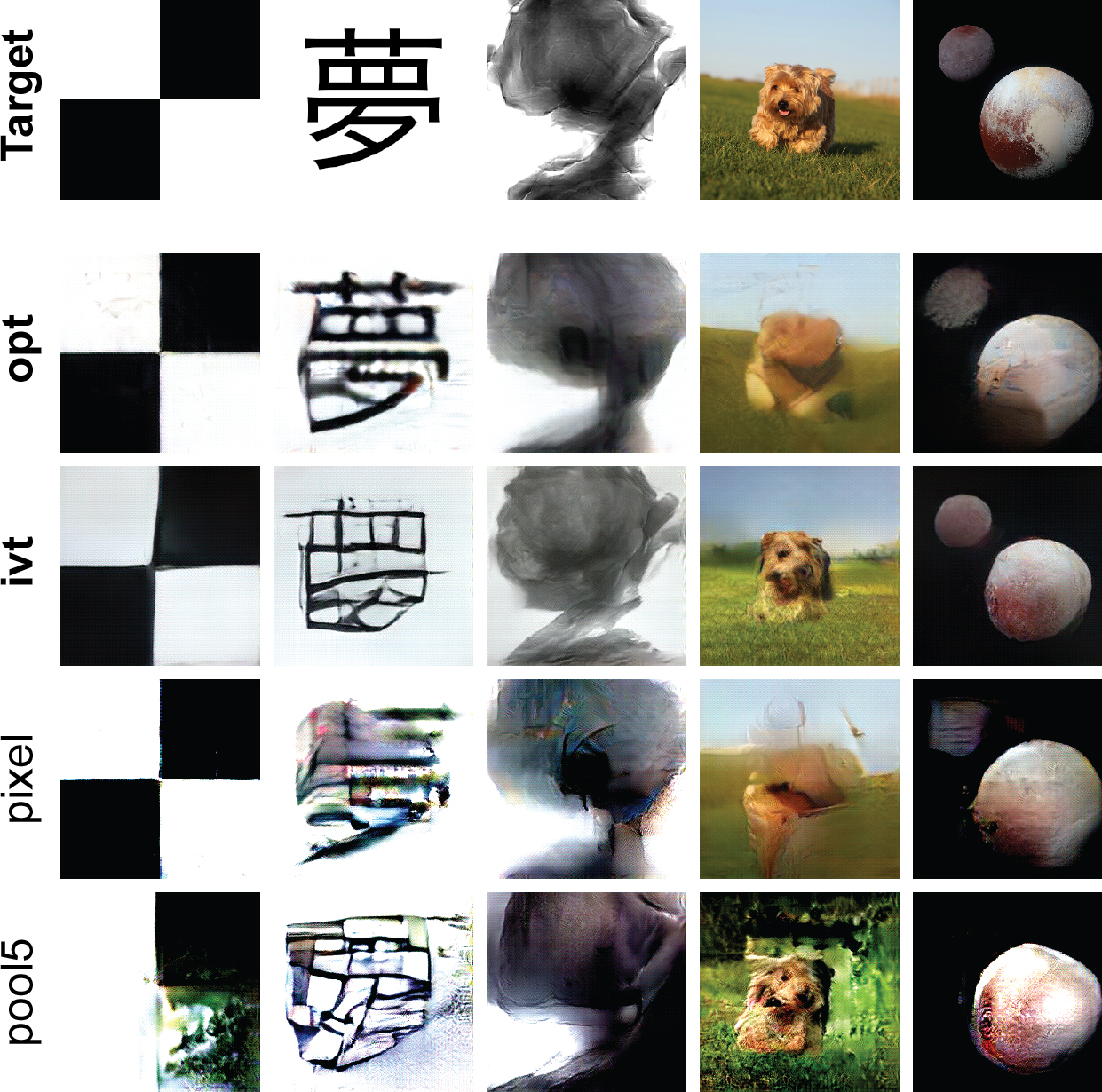

Casper et al. Robust feature-level adversaries are interpretability tools. NeurIPS 2022. PDF

This work introduces targeted, disguised, physically realizable, black-box, and universal adversarial attacks. The methodology shows that adversarial attacks can help better understand representations in machine learning and also illustrates how adversarial attacks have been discovered by biology in natural settings. |

|

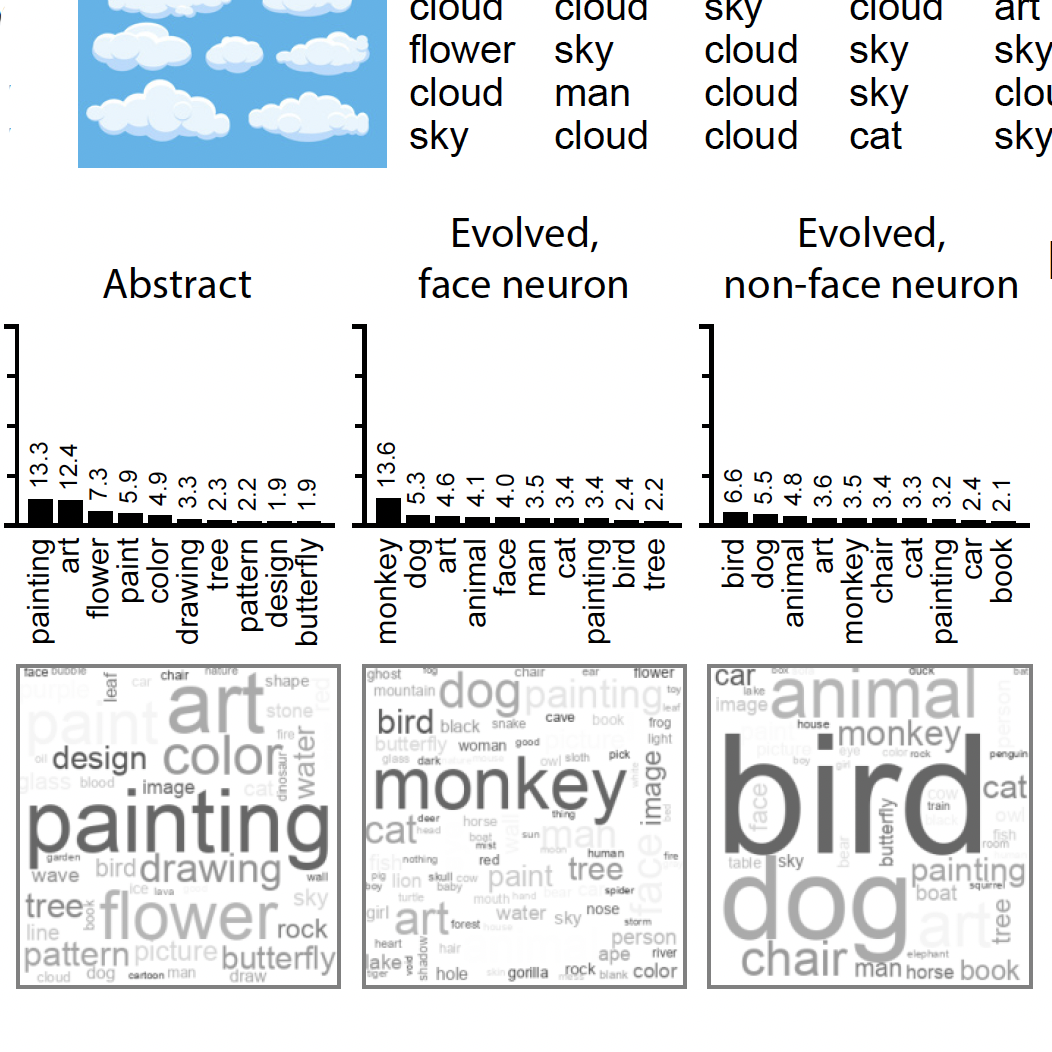

Bardon et al. Face neurons encode non-semantic features. PNAS 2022. PDF There has been extensive discussion about neurons that respond more strongly to faces than to other objects like houses or chairs. Here we show that so-called face neurons respond to complex visual features that are present in faces but also in many other objects. We conclude that these neurons do not encode semantic information about faces. |

|

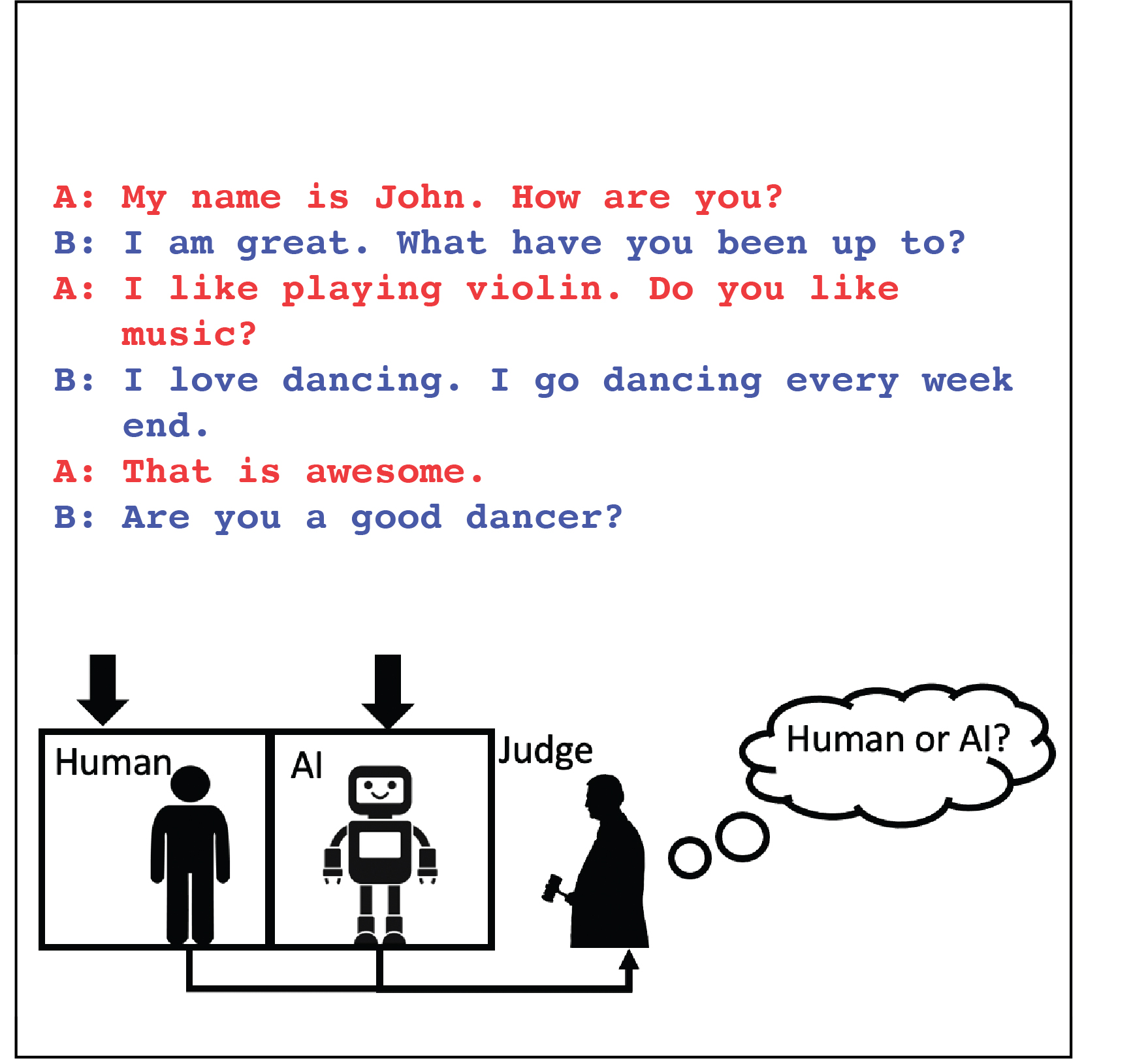

Zhang et al. Human or Machine? Turing Tests for Vision and Language. arXiv 2022. PDF

As AI algorithms become increasingly better at multiple tasks, there are many real-world problems where it is imperative to understand whether an agent is human or not. Here we perform extensive Turing tests for vision and language tasks and characterize the state-of-the-art in how well machines can imitate humans. |

|

Sanchez Lopez et al. How to make a match: Neural representation of memories in the human brain during a memory card game. Submitted. PDF coming soon

Here we investigate the mechanisms of memory formation during a realistic game that involves matching images. Invasive neurophysiological recordings from the human brain reveal the spatiotemporal dynamics underlying the processes of encoding, retrieval and matching. |

|

Zhang et al. Look Twice: A Generalist Computational Model Predicts Return Fixations across Tasks and Species. PLoS Computational Biology 2022. PDF

We move our eyes several times a second, bringing the center of gaze into focus and high resolution. While we typically assume that we can rapidly recognize the contents at each fixation, recent observations cast doubt on this assumption. Instead, we show that monkeys and humans frequently return to previously visited locations. Zhang and colleagues provide a computational model that can capture these eye movements and return fixations across a wide variety of tasks and conditions. |

|

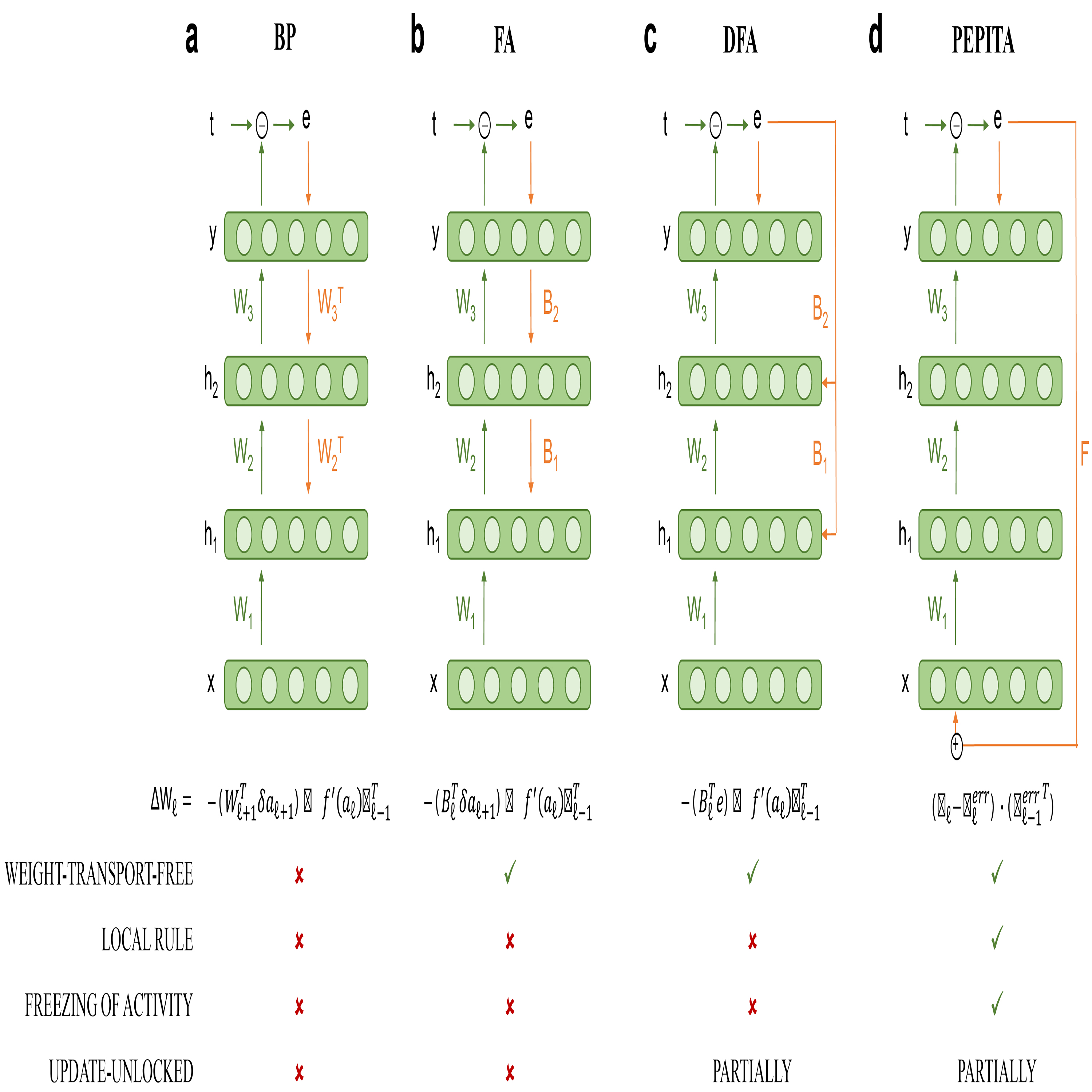

Dellaferrera et al. Error-driven input modulation: Solving the credit assignment problem without a backward pass. ICML 2022. PDF

Supervised learning in artificial neural networks typically relies on backpropagation (BP). Here we replace the backward pass with a second forward pass in which the input signal is modulated by the network error. This novel learning rule addresses key concerns about the plausibility of BP and can be applied to both fully connected and convolutional models. These results help incorporate biological principles into machine learning. |
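As a rough illustration of the idea summarized above, the following minimal sketch trains a tiny two-layer network with two forward passes and no backward pass. It is not the authors' implementation: the linear output layer, the fixed `feedback` matrix, the specific update expressions, and all function names are assumptions chosen for a toy example.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def relu(v: Vector) -> Vector:
    return [max(0.0, x) for x in v]


def forward(w1: Matrix, w2: Matrix, x: Vector):
    """One forward pass: ReLU hidden layer, linear output (an assumption)."""
    hidden = relu(matvec(w1, x))
    return hidden, matvec(w2, hidden)


def subtract_outer(w: Matrix, left: Vector, right: Vector, lr: float) -> None:
    """In place: w -= lr * outer(left, right)."""
    for i, l in enumerate(left):
        for j, r in enumerate(right):
            w[i][j] -= lr * l * r


def input_modulation_step(w1: Matrix, w2: Matrix, feedback: Matrix,
                          x: Vector, target: Vector, lr: float) -> float:
    """One training step with two forward passes and no backward pass."""
    hidden, output = forward(w1, w2, x)           # first pass, clean input
    error = [o - t for o, t in zip(output, target)]
    # Second pass: the input is modulated by the error, projected through a
    # fixed feedback matrix (hypothetical here, shape: inputs x outputs).
    x_mod = [xi + fi for xi, fi in zip(x, matvec(feedback, error))]
    hidden_mod, _ = forward(w1, w2, x_mod)
    # Hidden layer learns from the difference between the two passes.
    delta_hidden = [h - hm for h, hm in zip(hidden, hidden_mod)]
    subtract_outer(w1, delta_hidden, x_mod, lr)
    # Output layer learns from the error and the second-pass hidden activity.
    subtract_outer(w2, error, hidden_mod, lr)
    return sum(e * e for e in error)              # squared error before update


if __name__ == "__main__":
    w1 = [[0.5, -0.2], [0.3, 0.8]]
    w2 = [[0.4, -0.6]]
    feedback = [[0.1], [-0.2]]
    for step in range(5):
        loss = input_modulation_step(w1, w2, feedback, [1.0, 2.0], [1.0], 0.05)
        print(step, round(loss, 4))
```

With `feedback` set to all zeros the two passes coincide, the hidden layer stops learning, and only the output layer is updated by a delta rule, which makes the role of the error-modulated input easy to see.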

|

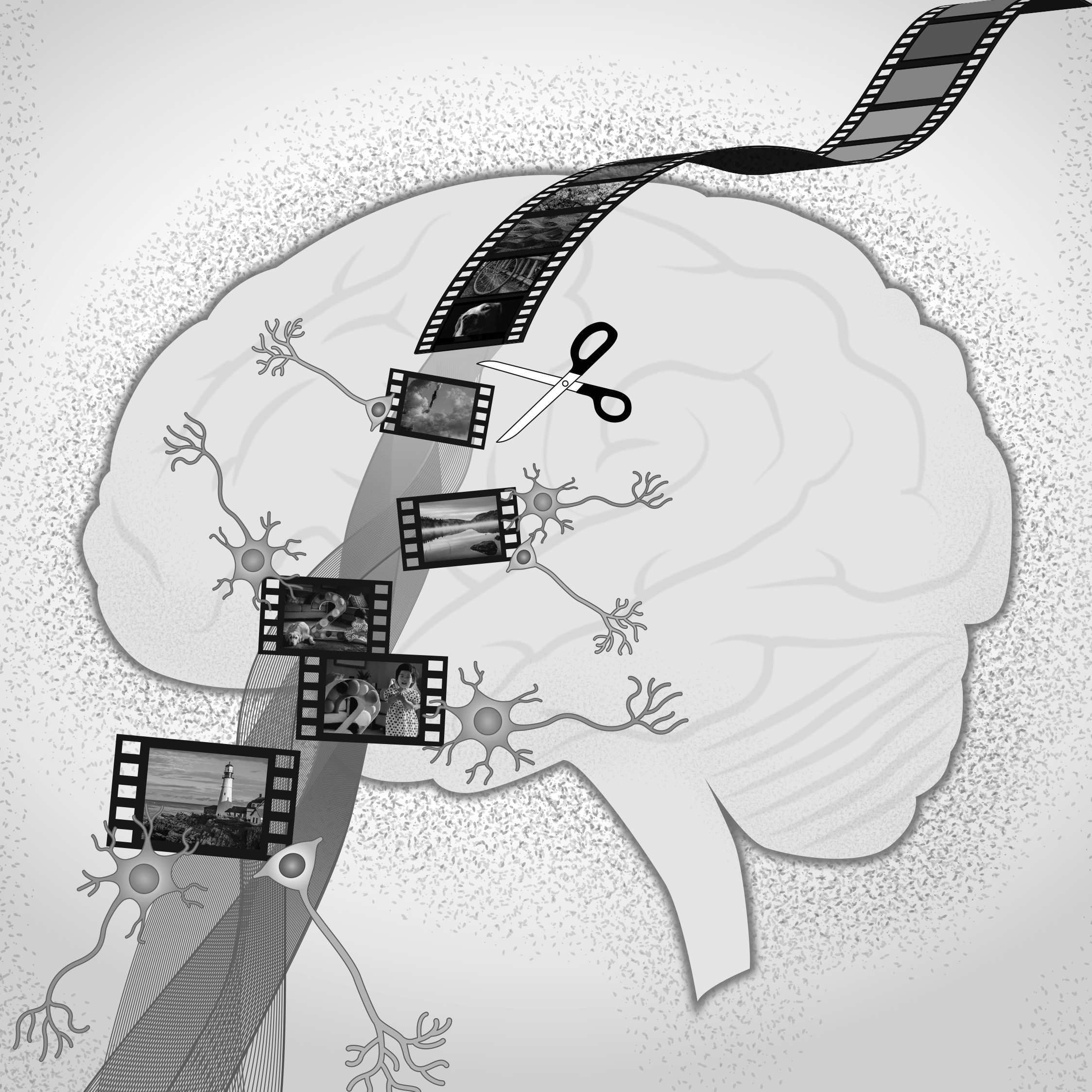

Zheng et al. Neurons detect cognitive boundaries to structure episodic memories in humans. Nature Neuroscience 2022. PDF

While experience is continuous, memories are organized as discrete events. Cognitive boundaries are thought to segment experience and structure memory, but how this process is implemented remains unclear. Here we show that neurons responded to abstract cognitive boundaries between different episodes. |

|

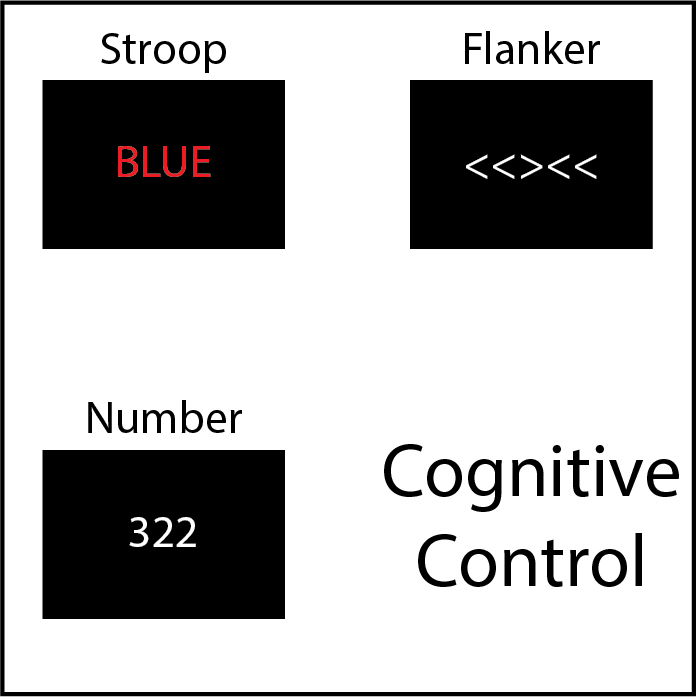

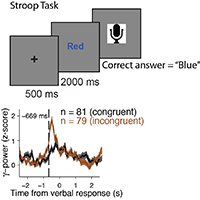

Xiao et al. Cross-Task Specificity and Within-Task Invariance of Cognitive Control Processes. Cell Reports 2022. PDF

Everyday decisions require integrating sensory cues and task demands while resolving conflict in goals and cues (e.g., “should I go for a run or stay home and watch a movie?”). Theories of cognitive control posit universal mechanisms of conflict resolution. The results of the current study challenge this dogma by demonstrating that the neural circuits involved in cognitive control are specific to the combination of inputs and outputs in each task. |

|

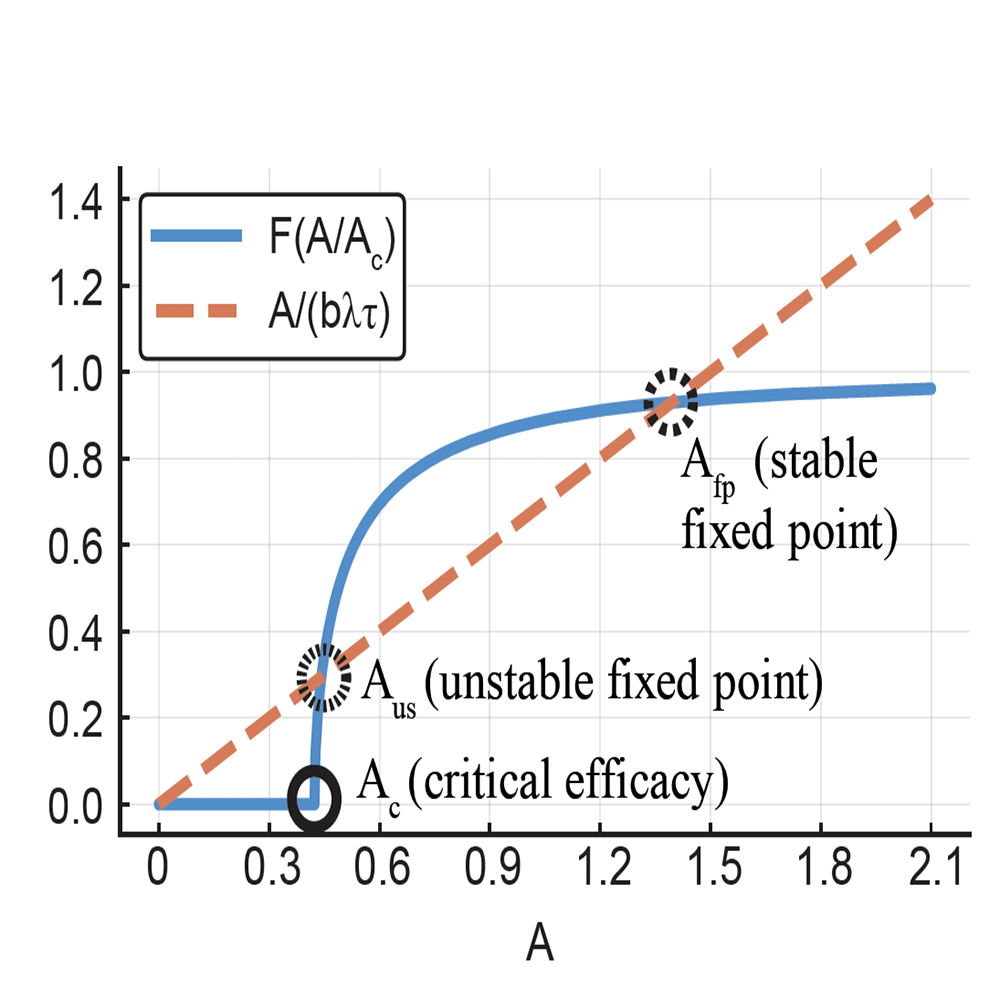

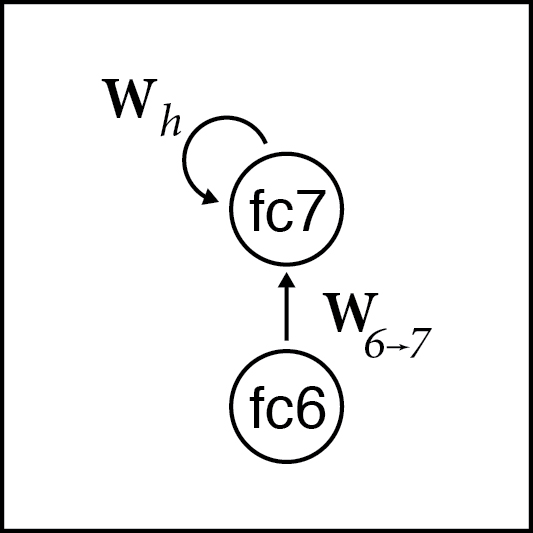

Shaham et al. Stochastic consolidation of lifelong memory. Scientific Reports 2022. PDF This study introduces a model for continual learning inspired by an attractor-based recurrent neural network that combines Hebbian plasticity, forgetting via synaptic decay, and a replay-based consolidation mechanism. |

|

Wang et al. Mesoscopic functional interactions in the human brain reveal small-world properties. Cell Reports 2021. PDF

Cognition relies on rapid and robust communication between brain areas. Wang et al. leverage multi-day intracranial field potential recordings to characterize the human mesoscopic functional interactome. The methods are validated using monkey anatomical and physiological data. The human interactome reveals small-world properties and is modulated by sleep versus awake state. |

Gupta et al. Visual Search Asymmetry: Deep Nets and Humans Share Similar Inherent Biases. NeurIPS 2021. PDF

Despite major progress in development of artificial vision systems, humans still outperform computers in most complex visual tasks. To gain better understanding of the similarities and differences between biological and computer vision, here we examined the enigmatic asymmetries in visual search. Sometimes, humans find it much easier to find a certain object A among distractors B than the reverse. Here Gupta et al. demonstrate that an eccentricity-dependent model of visual search can capture these forms of asymmetries and that these asymmetries are dependent on the diet of visual inputs that the networks see during training. |

|

|

Bomatter et al. When Pigs Fly: Contextual Reasoning in Synthetic and Natural Scenes. International Conference on Computer Vision (ICCV) 2021. PDF Scene understanding requires the integration of contextual cues. In this study, Bomatter et al. investigate contextual reasoning in humans and machines by creating a novel dataset consisting of synthetic images based on state-of-the-art computer graphics. In this new dataset, they use violations of contextual common sense rules to investigate the impact on visual recognition. Furthermore, the authors propose a new neural network architecture that provides an approximation to human behavior in rapid contextual reasoning tasks. |

|

Zhang et al. Putting visual recognition in context. CVPR 2020. PDF This study systematically investigates where, when, and how contextual information modulates visual object recognition. The work introduces a computational model (CATNet, context-aware two-stream network) that approximates human visual behavior in the incorporation of contextual cues for visual recognition. |

|

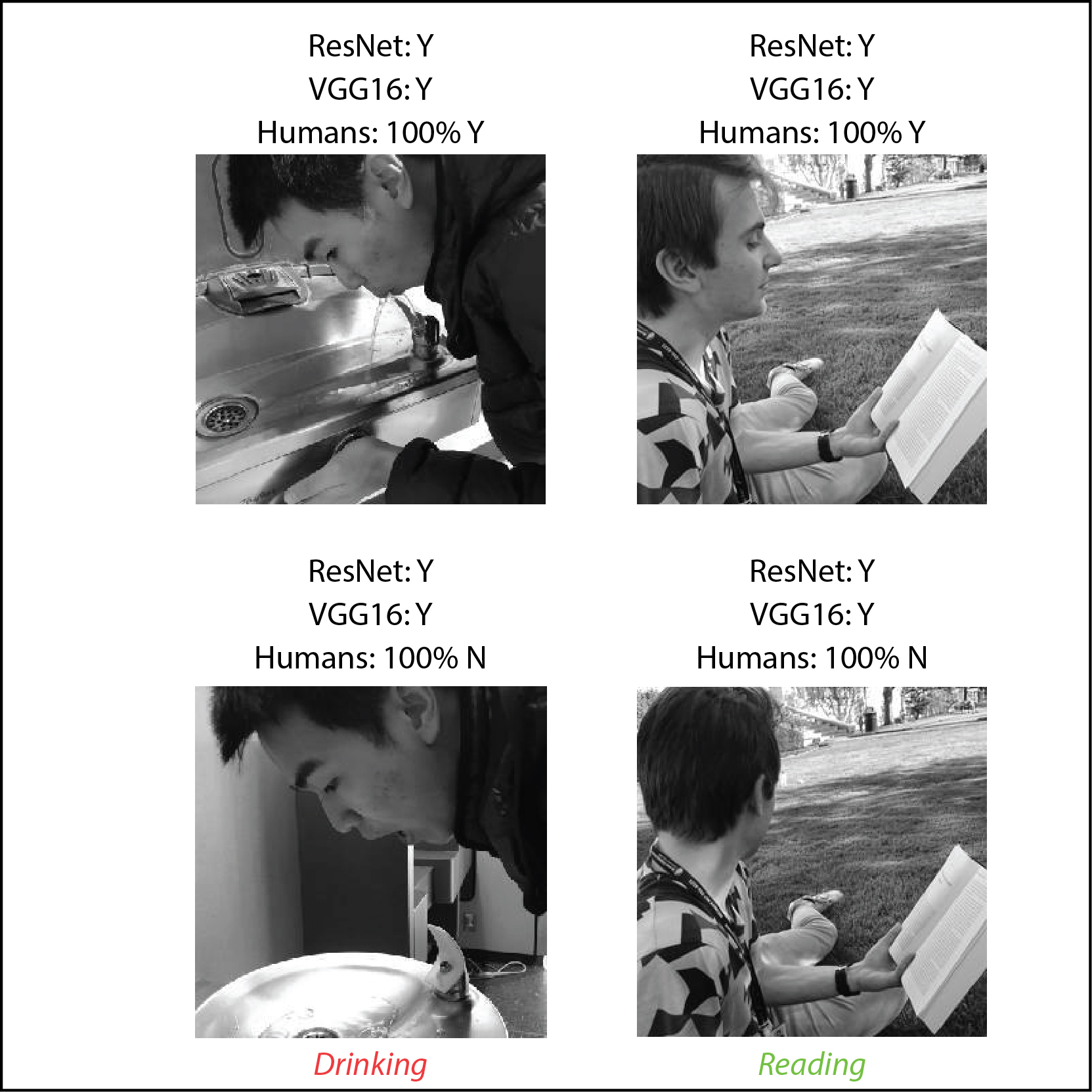

Jacquot et al. Can deep learning recognize subtle human activities? CVPR 2020. PDF Success in many computer vision efforts capitalizes on confounding factors and biases introduced by poorly controlled datasets. Here we introduce a procedure to create more controlled datasets, and we exemplify the process by creating a challenging dataset to study recognition of everyday actions. |

|

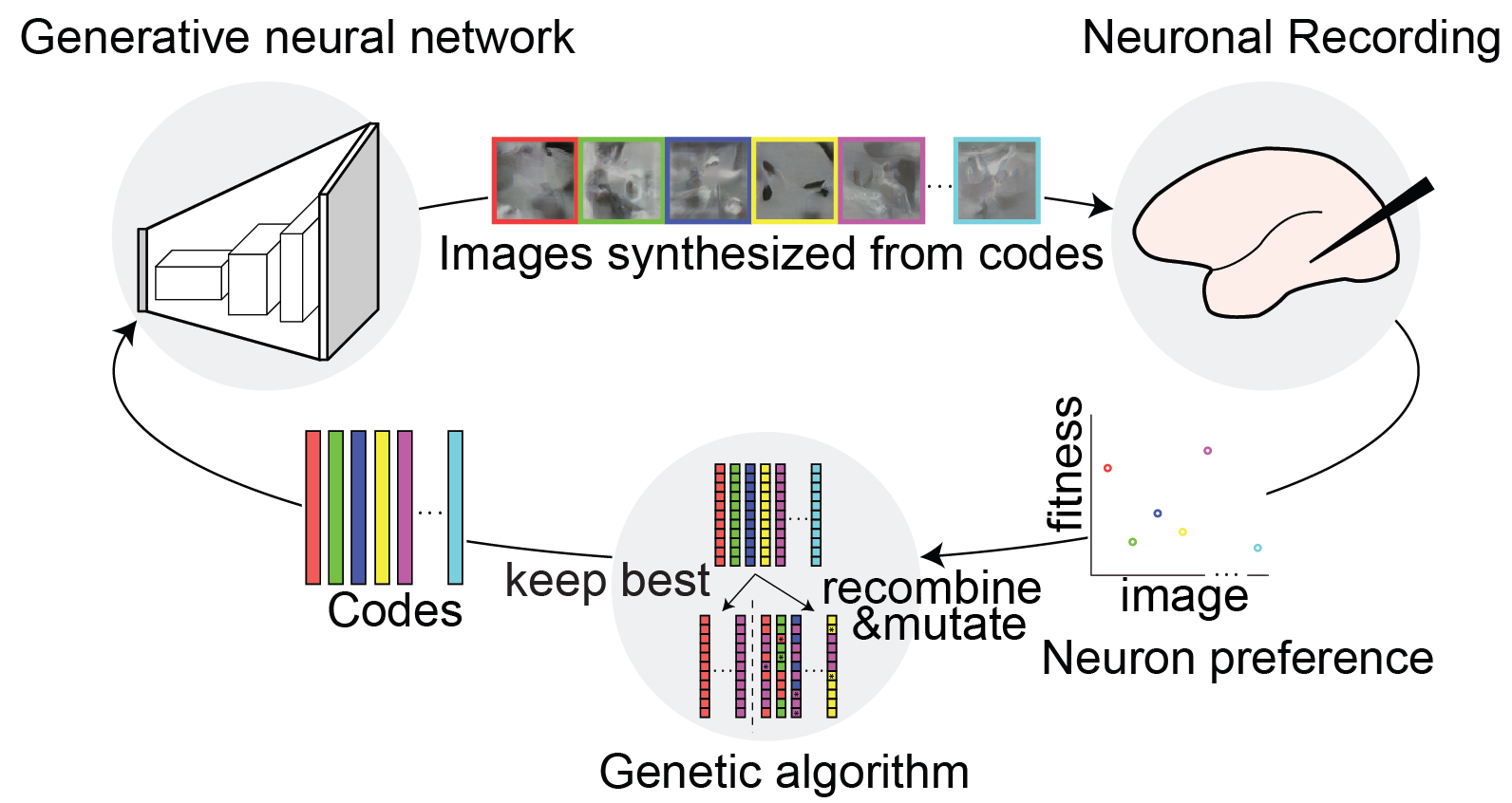

Xiao et al. Finding Preferred Stimuli for Visual Neurons Using Generative Networks and Gradient-Free Optimization. PLoS Computational Biology 2020. PDF This study introduces the XDream algorithm to find preferred stimuli for neurons in an unbiased manner. The study shows the robustness of XDream to different architectures, generators, developmental regimes, and noise. |

|

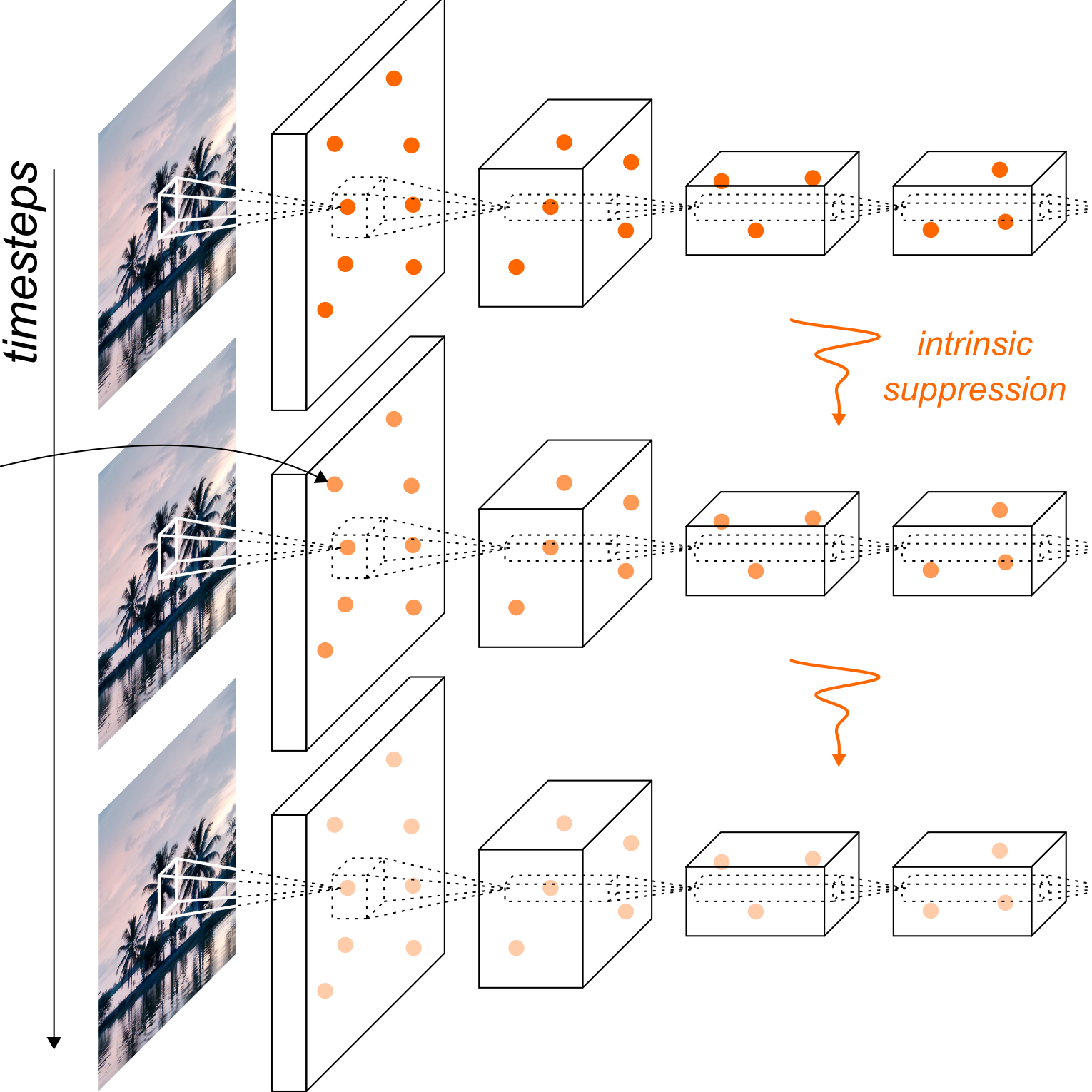

Vinken et al. Incorporating intrinsic suppression in deep neural networks captures dynamics of adaptation in neurophysiology and perception. Science Advances 2020. PDF This study introduces a computational model of adaptation in visual cortex. The model relies exclusively on activity-dependent, neuronally intrinsic mechanisms. The deep convolutional neural network architecture can explain a plethora of observations at both the perceptual and neurophysiological levels. |

|

Ben-Yosef et al. Minimal videos: Trade-off between spatial and temporal information in human and machine vision. Cognition 2020. PDF This study investigates the role of spatiotemporal integration in visual recognition. We introduce "minimal videos", which can be readily recognized by humans but become unrecognizable by a small reduction in the amount of either spatial or temporal information. The stimuli and behavioral results presented here challenge state-of-the-art computer vision models of action recognition. |

Zhang et al. What Am I Searching For? EPIC Workshop, CVPR 2020. PDF Can we infer intentions and goals from a person's actions? As an example of this family of problems, we consider here whether it is possible to decipher what a person is searching for by decoding their eye movement behavior and predicting their behavior using a computational model. |

|

Ponce et al. Evolving Images for Visual Neurons Using a Deep Generative Network Reveals Coding Principles and Neuronal Preferences. Cell 2019. PDF This study introduces a new algorithm to discover neural tuning properties in visual cortex. The method combines a deep generative network and a genetic algorithm to search for images that elicit high firing rates in real-time in an unbiased manner. The results of applying this algorithm to macaque V1 and IT neurons challenge existing dogmas about how neurons in ventral visual cortex represent information. |
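The general recipe described above, a generator driven by a genetic algorithm that maximizes a black-box response, can be sketched as follows. This is a toy stand-in and not the published algorithm: `toy_generator` (an identity map in place of a deep generative network), `toy_neuron` (a made-up scoring function in place of a recorded firing rate), and all parameter values are hypothetical.

```python
import random
from typing import Callable, List, Tuple

Code = List[float]


def toy_generator(code: Code) -> List[float]:
    """Stand-in for a deep generative network: maps a latent code to an 'image'."""
    return list(code)


def toy_neuron(image: List[float]) -> float:
    """Stand-in for a firing rate: highest when the image matches a hidden preference."""
    preferred = [0.5, -0.3, 0.8, 0.1]
    return -sum((p - q) ** 2 for p, q in zip(image, preferred))


def evolve(score: Callable[[List[float]], float],
           generator: Callable[[Code], List[float]],
           dim: int, pop_size: int = 20, n_gen: int = 30,
           n_keep: int = 5, sigma: float = 0.2,
           seed: int = 0) -> Tuple[Code, List[float]]:
    """Gradient-free search over latent codes using only the scores."""
    rng = random.Random(seed)
    population = [[rng.gauss(0.0, 1.0) for _ in range(dim)]
                  for _ in range(pop_size)]
    history = []
    for _ in range(n_gen):
        ranked = sorted(population,
                        key=lambda c: score(generator(c)), reverse=True)
        history.append(score(generator(ranked[0])))
        parents = ranked[:n_keep]          # the best codes survive unchanged
        children = []
        while len(children) < pop_size - n_keep:
            a, b = rng.sample(parents, 2)
            # Recombine two parents gene by gene, then add Gaussian mutation.
            children.append([rng.choice(pair) + rng.gauss(0.0, sigma)
                             for pair in zip(a, b)])
        population = parents + children
    best = max(population, key=lambda c: score(generator(c)))
    return best, history


if __name__ == "__main__":
    best_code, best_scores = evolve(toy_neuron, toy_generator, dim=4)
    print(best_scores[0], best_scores[-1])
```

Because the top codes are carried over unchanged, the best score never decreases from one generation to the next. The search only ever sees scores, so the same loop would work with any response that can be measured but not differentiated.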

|

Zhang et al. Lift-the-flap: what, where and when for context reasoning. arXiv 1902.00163. PDF This study shows that it is possible to infer the identity of an object purely from contextual cues, without any information about the object itself. The study proposes a computational model of contextual reasoning inference. |

Madhavan et al. Neural Interactions Underlying Visuomotor Associations in the Human Brain. Cerebral Cortex, 2019. PDF This study uncovers plausible neural mechanisms instantiating reinforcement learning rules to associate visual and motor actions by trial-and-error learning via interactions between frontal regions and visual cortex as well as between frontal cortex and motor cortex. |

|

|

Kreiman. What do neurons really want? The role of semantics in cortical representations. Psychology of Learning and Motivation 2019. PDF This chapter discusses how the field has investigated the neural code for visual features along ventral cortex, how computational models should be used to define neuronal tuning preferences, and how to think about the role of semantics in the representation of visual information. |

|

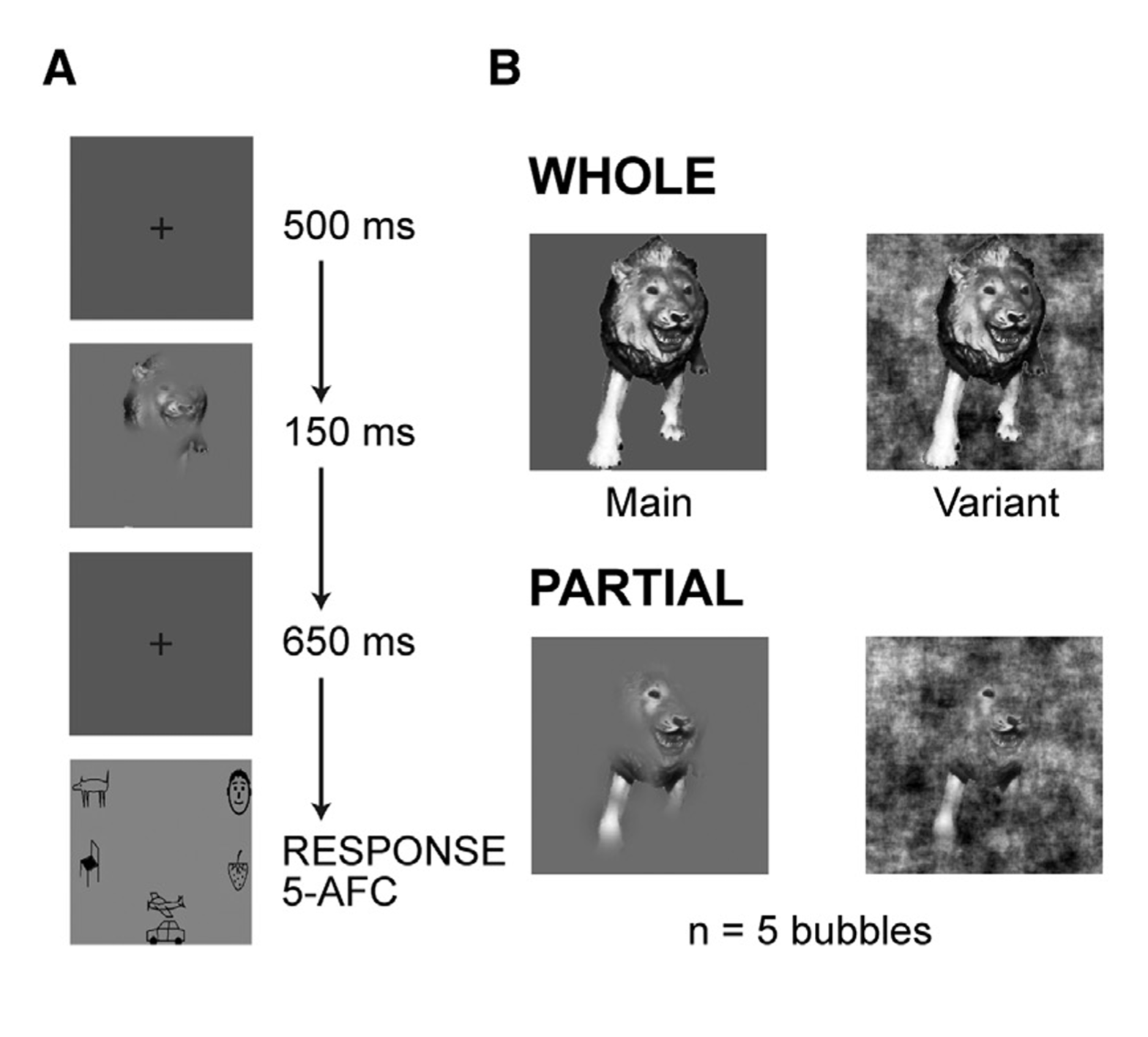

Tang et al. Recurrent computations for visual pattern completion. PNAS 2018. PDF

How can we make inferences from partial information? This study combines behavioral, neurophysiological and computational tools to show that recurrent computations can help perform visual pattern completion. |

|

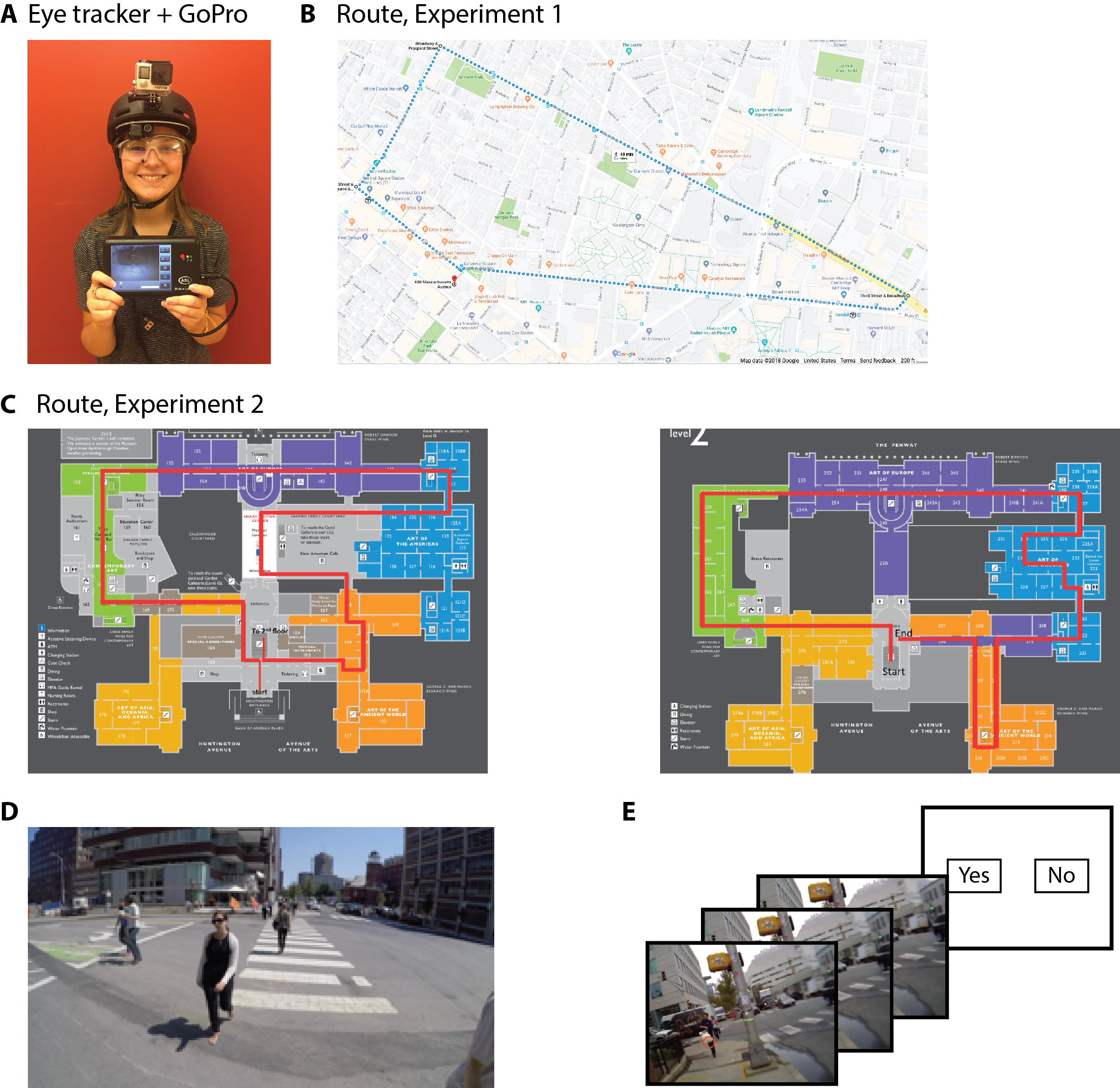

Misra et al. Minimal memory for details in real life events. Scientific Reports 2018. PDF

This study scrutinizes one hour of real life events and shows that humans tend to forget the vast majority of the details. Only a small fraction of events is crystallized in the form of episodic memories. |

Zhang et al. Finding any Waldo: zero-shot invariant and efficient visual search. Nature Communications 2018. PDF

This study demonstrates that humans can perform invariant and efficient visual search and introduces a biologically inspired computational model capable of performing zero-shot invariant visual search in complex natural scenes. |

|

|

Wu et al. Learning scene gist with convolutional neural networks to improve object recognition. IEEE CISS 2018

A deep convolutional architecture with two sub-networks, a fovea and a periphery, to integrate spatial contextual information for visual recognition. |

|

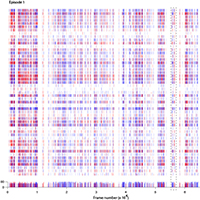

Isik et al. What is changing when: Decoding visual information in movies from human intracranial recordings. Neuroimage 2017. PDF

Detection of temporal transitions directly from field potentials along ventral visual cortex. |

|

Olson et al. Simple learning rules generate complex canonical circuits

This study demonstrates that it is possible to develop a network that resembles the canonical circuit architecture in neocortex starting from a tabula rasa network and implementing simple spike-timing dependent plasticity rules. |

|

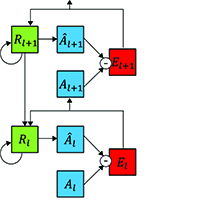

Lotter et al. Deep predictive coding networks for video prediction and unsupervised learning. International Conference on Learning Representations (ICLR) 2017. PDF

A deep model including bottom-up and top-down connections to make predictions in video sequences. |

|

Tang et al. Predicting episodic memory formation for movie events. Scientific Reports 2016. PDF

Machine learning approach to predict whether specific events within a movie will be remembered or not. |

Miconi et al. There's Waldo! A Normalization Model of Visual Search Predicts Single-Trial Human Fixations in an Object Search Task. Cerebral Cortex 2016. PDF

This work presents a biologically inspired computational model for visual search. |

|

Tang et al. Cascade of neural processing orchestrates cognitive control in human frontal cortex. eLife 2016. PDF

A dynamic and hierarchical sequence of steps in human frontal cortex orchestrates cognitive control. |

|

Tang et al. Spatiotemporal dynamics underlying object completion in human ventral visual cortex. Neuron 2014. PDF

We often encounter partially visible objects in the real world due to poor illumination, noise, or occlusion. Even under conditions of highly reduced visibility, the primate visual system is capable of performing pattern completion to robustly recognize those objects. In this study, Tang et al. provide initial glimpses into the neural circuits along the ventral visual cortex in the human brain responsible for pattern completion and recognition of heavily occluded objects. |

|

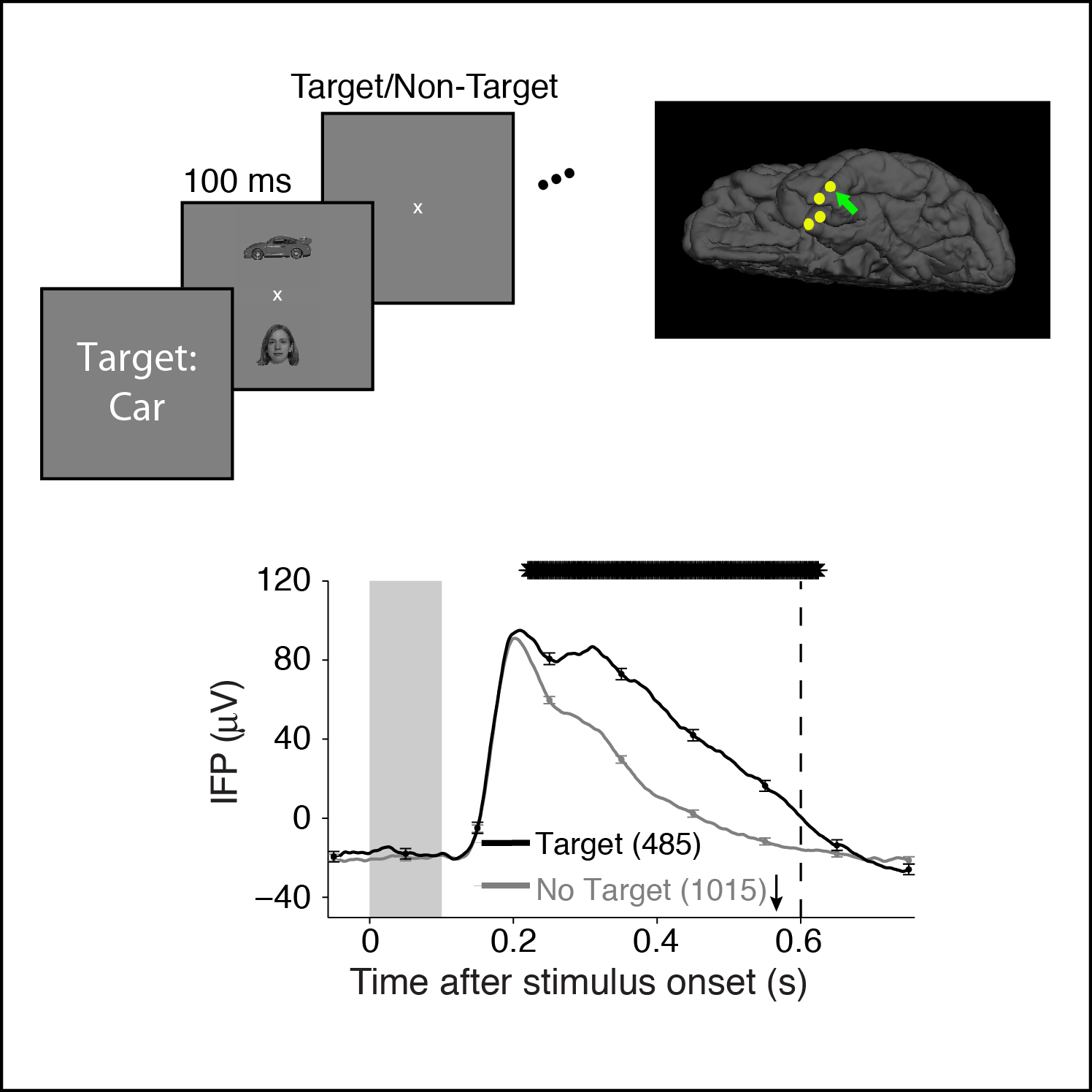

Bansal et al. Neural dynamics underlying target detection in the human brain. Journal of Neuroscience 2014. PDF

Feature-based attention modulates responses along the human ventral visual stream during a target detection task. |

Singer and Kreiman. Asynchrony disrupts object recognition. Journal of Vision 2014. PDF

Spatiotemporal integration during recognition breaks down with even small deviations from simultaneity. |

|

Hemberg et al. Integrated genome analysis suggests that most conserved non-coding sequences are regulatory factor binding sites. Nucleic Acids Research 2012. PDF

A method to build putative transcripts from high-throughput total RNA-seq data (HATRIC). |

|

|

Kriegeskorte and Kreiman. Understanding visual population codes. MIT Press 2011. Towards a common multivariate framework for cell recording and functional imaging. Link to code and other resources. |

|

Kim et al. Widespread transcription at thousands of enhancers during activity-dependent gene expression in neurons. Nature 2010. PDF

Discovery of transcription at enhancers, eRNAs. |

|

Agam et al. Robust selectivity to two-object images in human visual cortex. Current Biology 2010. PDF

The physiological responses at the level of field potentials along ventral visual cortex show robustness to clutter. |

|

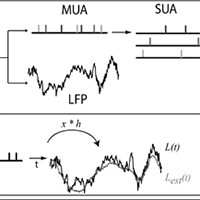

Rasch et al. From neurons to circuits: linear estimation of local field potentials. Journal of Neuroscience 2009. PDF

Computational model to investigate the relationship between spikes and local field potential signals. |

|

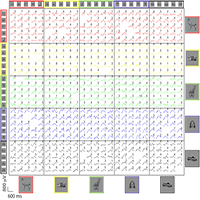

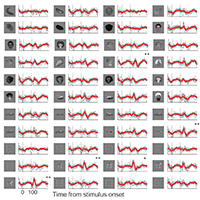

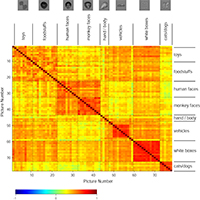

Meyers et al. Dynamic Population Coding of Category Information in ITC and PFC. Journal of Neurophysiology 2008. PDF

Meyers et al. introduce a novel methodology to shed light on the dynamics of encoding of visual information by applying cross-time decoding. Applying this method, the authors show that neurons in inferior temporal cortex mostly encode image features whereas prefrontal cortex neurons represent task-dependent information. There is minimal extrapolation in decoding performance across time.
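Cross-time decoding, as summarized above, trains a classifier on responses from one time bin and tests it on another, giving a train-time by test-time accuracy matrix. The sketch below illustrates that bookkeeping only. The nearest-centroid classifier, the toy trials built by the hypothetical `make_trial`, and all other names are assumptions for illustration, not the authors' pipeline.

```python
from typing import Dict, List, Tuple

# One trial: a feature vector per time bin, plus a class label.
Trial = Tuple[List[List[float]], int]


def fit_centroids(trials: List[Trial], t: int) -> Dict[int, List[float]]:
    """Mean response per label, using only time bin t."""
    by_label: Dict[int, List[List[float]]] = {}
    for feats, label in trials:
        by_label.setdefault(label, []).append(feats[t])
    return {label: [sum(col) / len(rows) for col in zip(*rows)]
            for label, rows in by_label.items()}


def predict(centroids: Dict[int, List[float]], x: List[float]) -> int:
    """Label of the nearest centroid (ties go to the smallest label)."""
    def sqdist(label: int) -> float:
        return sum((c - v) ** 2 for c, v in zip(centroids[label], x))
    return min(sorted(centroids), key=sqdist)


def cross_time_accuracy(train: List[Trial], test: List[Trial],
                        n_bins: int) -> List[List[float]]:
    """matrix[i][j] = accuracy when training at bin i and testing at bin j."""
    matrix = []
    for t_train in range(n_bins):
        model = fit_centroids(train, t_train)
        row = []
        for t_test in range(n_bins):
            hits = sum(predict(model, feats[t_test]) == label
                       for feats, label in test)
            row.append(hits / len(test))
        matrix.append(row)
    return matrix


def make_trial(label: int, gain: float) -> Trial:
    """Toy data: the label is carried by feature 0 in bin 0 and feature 1 in bin 1."""
    sign = 1.0 if label == 1 else -1.0
    return ([[sign * gain, 0.0], [0.0, sign * gain]], label)


if __name__ == "__main__":
    train = [make_trial(lab, g) for lab in (0, 1) for g in (1.0, 1.2)]
    test = [make_trial(lab, g) for lab in (0, 1) for g in (0.9, 1.1)]
    for row in cross_time_accuracy(train, test, n_bins=2):
        print(row)
```

In this toy case the code that carries the label changes completely between the two bins, so decoding is perfect on the diagonal and at chance off the diagonal. That is the signature of a dynamic code with little generalization across time.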

Liu et al. Timing, timing, timing: Fast decoding of object information from intracranial field potentials in human visual cortex. Neuron 2009. PDF Rapid, selective, and tolerant responses along the human ventral visual stream can be decoded in single trials. |

|

|

Kreiman et al. Object selectivity of local field potentials and spikes in the macaque inferior temporal cortex. Neuron 2006. PDF

Local field potentials in the macaque inferior temporal cortex show visual selectivity to different objects. |

|

Hung et al. Fast read-out of object identity from macaque inferior temporal cortex. Science 2005. PDF

Single trial rapid decoding of visual information from pseudo-populations of neurons in macaque inferior temporal cortex. |

Su et al. A gene atlas of the mouse and human protein-encoding transcriptomes. PNAS 2004. PDF

Microarray based profiling of gene expression across multiple tissues in mice and humans. |

|

Kreiman. Identification of sparsely distributed clusters of cis-regulatory elements in sets of co-expressed genes. Nucleic Acids Research 2004. PDF

A method for de novo discovery of gene regulatory sequences for sets of co-regulated genes (CISREGUL). |

|

Zirlinger et al. Amygdala-enriched genes identified by microarray technology are restricted to specific amygdaloid sub-nuclei. PNAS 2001. PDF

Microarray technology uncovered gene expression patterns of the different sub-nuclei within the amygdala. |

|

|

Spike sorting software (Spiker)

Extracellular recordings of spikes often capture the activity of multiple neurons in the vicinity of the microwire electrode. Spiker is an unsupervised algorithm to separate the different putative units. |

READ THIS FIRST BEFORE DOWNLOADING FROM THIS WEB SITE: All code is provided as is. You are welcome to send email to the main authors with questions and queries about the code. Note that due to the volume and nature of these emails, in most cases, we will not be able to answer them. Our intent in providing the code is to push the frontiers of science, help people reproduce results and improve upon them. However, we are not a software development company and we lack the resources to provide technical support. Note also that you are responsible for the use and misuse of the code provided here. Downloading any material from this web site implies your agreement with the rules specified here.
